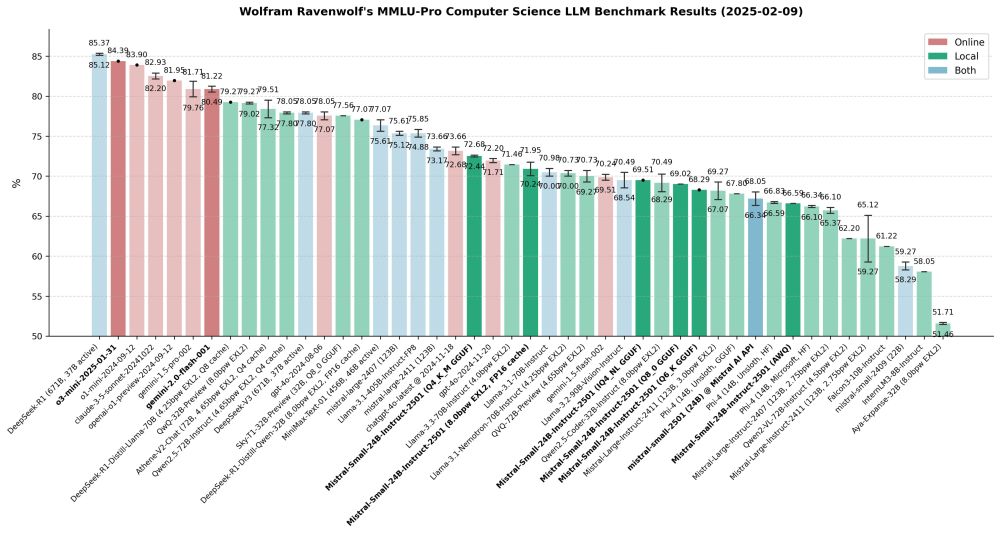

Here's a quick update on my recent work: I completed MMLU-Pro CS benchmarks of o3-mini, Gemini 2.0 Flash, and several quantized versions of Mistral Small 2501, plus its official API. As always, benchmarking revealed some surprising anomalies and unexpected results worth noting:

Mistral-Small-24B-Instruct-2501 is amazing for its size, but what's up with the quants? How can the 4-bit quants beat the 8-bit and 6-bit ones, and even Mistral's official API (which I'd expect to serve the unquantized model)? This held across 16 runs in total, so it's not a fluke; it's consistent. Very weird!
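The post doesn't say how run-to-run consistency was judged. One common check is Welch's t statistic, which compares the gap between two configurations' mean scores against the spread of their individual runs. The sketch below is a minimal illustration under that assumption (each run's score treated as an independent sample). The function name `welch_t` is hypothetical, not part of any benchmarking tool.

```python
import math
import statistics


def welch_t(scores_a: list[float], scores_b: list[float]) -> float:
    """Hypothetical helper: Welch's t statistic for the difference in mean
    score between two configurations (e.g. a 4-bit and an 8-bit quant),
    each benchmarked over several runs.

    A large |t| (roughly above 2 for moderate run counts) suggests the gap
    is bigger than run-to-run noise alone would explain.
    """
    mean_a = statistics.mean(scores_a)
    mean_b = statistics.mean(scores_b)
    # Sample variances (n - 1 denominator); each list needs at least two runs,
    # otherwise statistics.variance raises StatisticsError.
    var_a = statistics.variance(scores_a)
    var_b = statistics.variance(scores_b)
    # Standard error of the difference of means, without assuming equal variances.
    se = math.sqrt(var_a / len(scores_a) + var_b / len(scores_b))
    if se == 0.0:
        # Perfectly repeatable runs: any nonzero gap is "infinitely" significant.
        return 0.0 if mean_a == mean_b else math.copysign(math.inf, mean_a - mean_b)
    return (mean_a - mean_b) / se
```

With hypothetical scores of 70, 71, 72 for one configuration and 67, 68, 69 for another, `welch_t` returns about 3.67. Note that if runs are nearly deterministic (e.g. temperature 0), low variance says little about whether the gap would survive a different prompt set.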

Feb 10, 2025 22:38