Wolfram Ravenwolf

AI Engineer by title. AI Evangelist by calling. AI Evaluator by obsession.

Evaluates LLMs for breakfast, preaches AI usefulness all day long at ellamind.com.

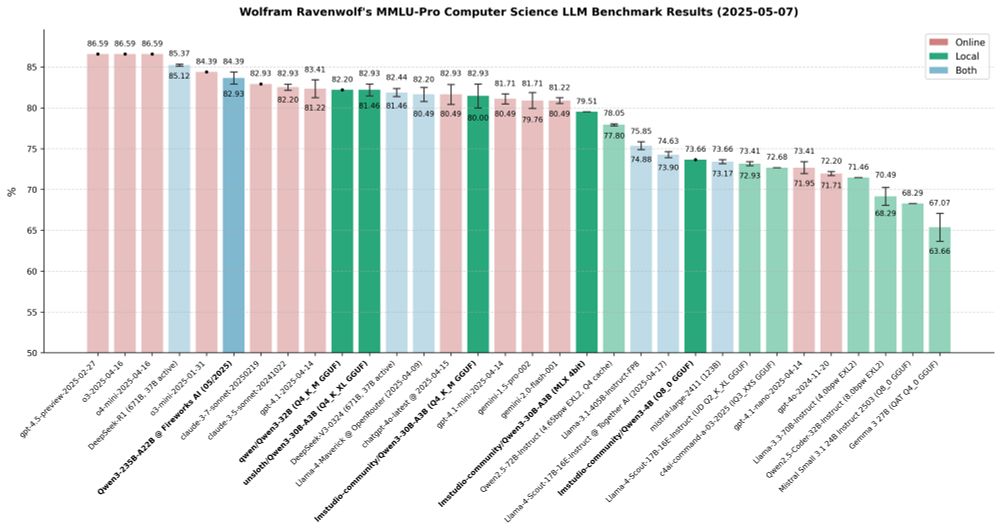

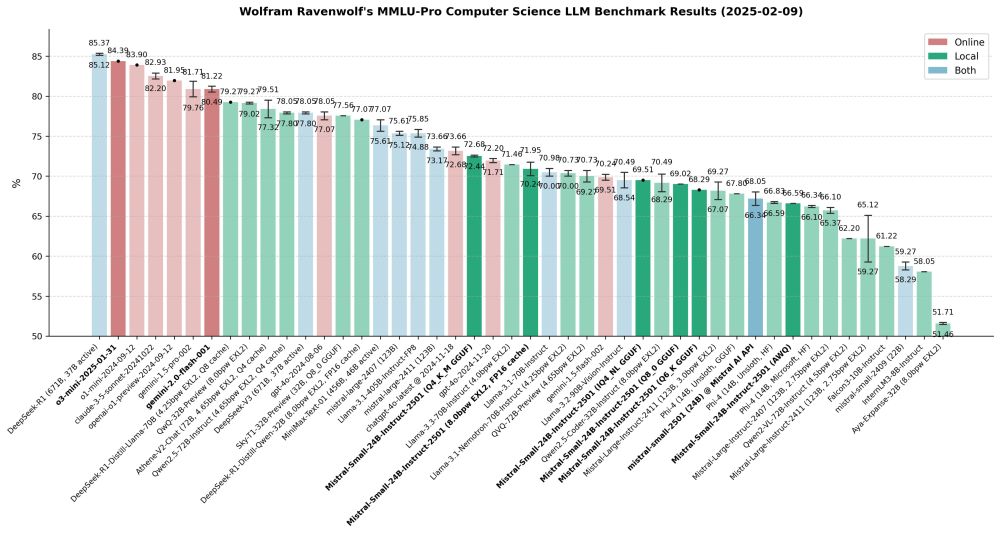

- Finally finished my extensive Qwen 3 evaluations across a range of formats and quantisations, focusing on MMLU-Pro (Computer Science). A few take-aways stood out - especially for those interested in local deployment and performance trade-offs:

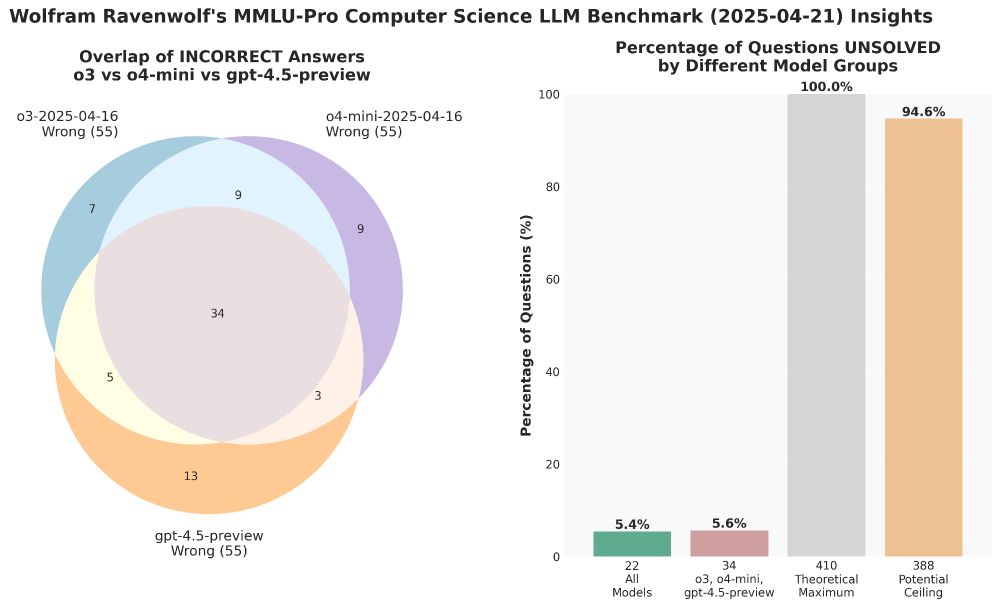

- New OpenAI models o3 and o4-mini evaluated - and, finally, for comparison GPT 4.5 Preview as well. Definitely unexpected to see all three OpenAI top models get the exact same, top score in this benchmark. But they didn't all fail the same questions, as the Venn diagram shows. 🤔

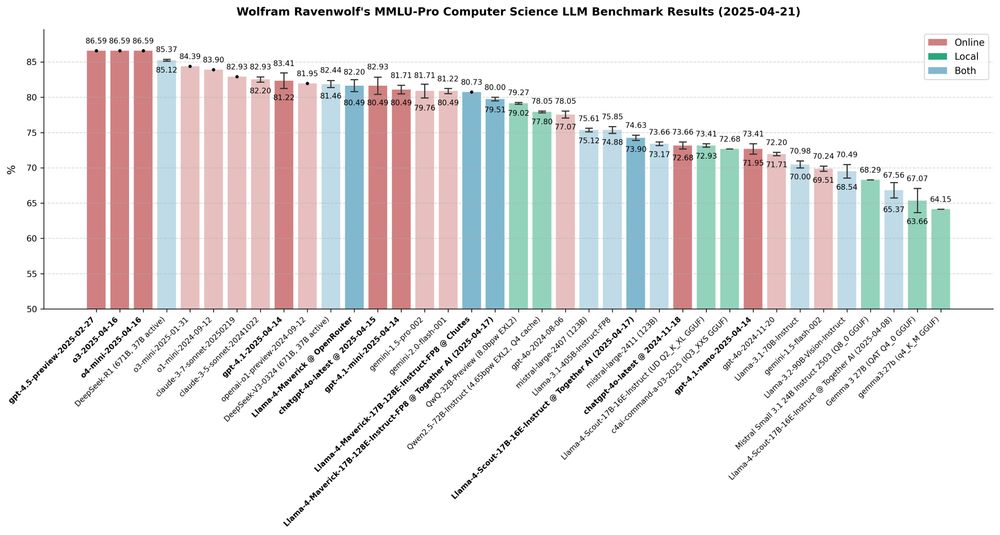

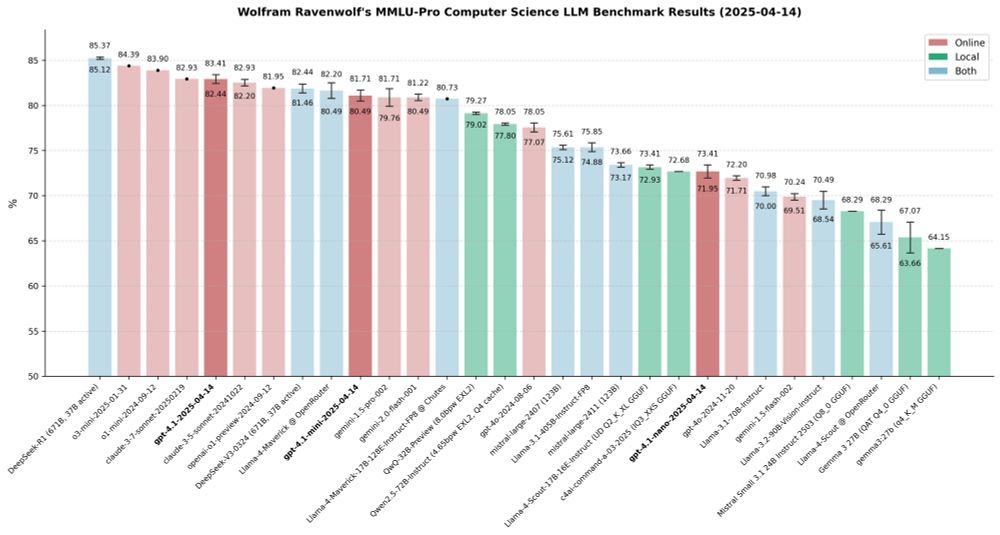
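A minimal sketch of how such a Venn breakdown can be computed: identical scores only require the same number of failed questions, not the same questions. The question IDs below are hypothetical placeholders, not the actual benchmark results, and `venn_regions` is an illustrative helper name.

```python
# Hypothetical failed-question IDs per model (NOT real benchmark data).
# Each model fails the same number of questions, so the scores tie.
failed = {
    "o3": {3, 17, 42, 88},
    "o4-mini": {3, 17, 56, 91},
    "gpt-4.5-preview": {3, 42, 56, 77},
}


def venn_regions(failed_by_model):
    """Group questions by the exact set of models that failed them."""
    all_questions = set().union(*failed_by_model.values())
    regions = {}
    for question in sorted(all_questions):
        # The Venn region is identified by which models failed this question.
        key = frozenset(m for m, qs in failed_by_model.items() if question in qs)
        regions.setdefault(key, []).append(question)
    return regions


regions = venn_regions(failed)
for models, questions in regions.items():
    print(sorted(models), questions)
```

The region keyed by all three models holds the questions every model missed; the single-model regions show where the models diverge despite the tied score.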

- New OpenAI models: gpt-4.1, gpt-4.1-mini, gpt-4.1-nano - all already evaluated! Here's how these three LLMs compare to an assortment of other strong models, online and local, open and closed, in the MMLU-Pro CS benchmark:

- Congrats, Alex, well deserved! 👏 (Still wondering if he's man or machine - that dedication and discipline to do this week after week in a field that moves faster than any other, that requires superhuman drive! Utmost respect for that, no cap!)

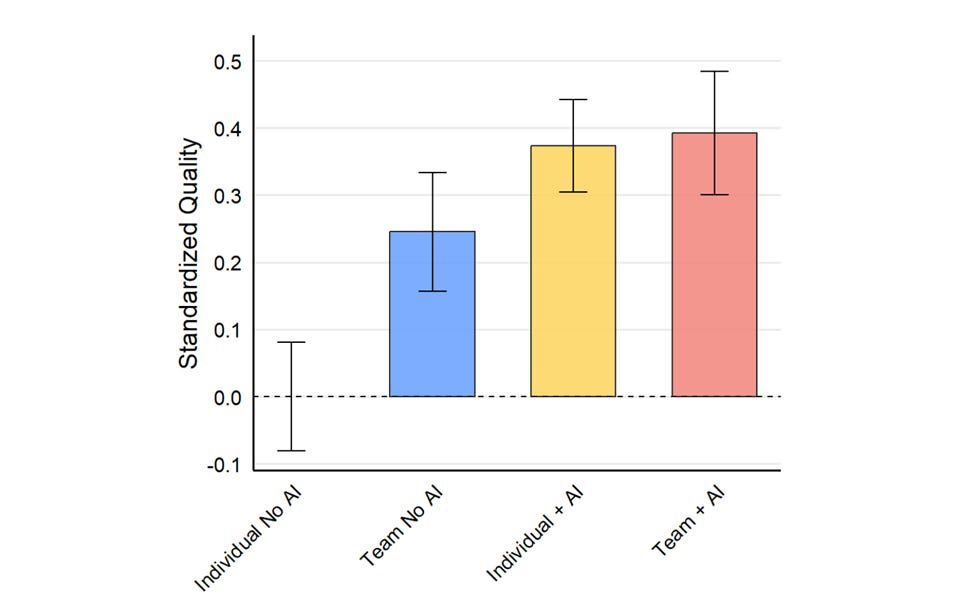

- Reposted by Wolfram Ravenwolf: Our research at Procter and Gamble found very large gains to work quality & productivity from AI. It was conducted using GPT-4 last summer. Since then we have seen Gen3 models, reasoners, large context windows, full multimodal, deep research, web search… www.oneusefulthing.org/p/the-cybern...

- Here's a quick update on my recent work: Completed MMLU-Pro CS benchmarks of o3-mini, Gemini 2.0 Flash and several quantized versions of Mistral Small 2501 and its API. As always, benchmarking revealed some surprising anomalies and unexpected results worth noting:

- It's official now - the name I'm known by in AI circles is now also formally entered on my ID card! 😎

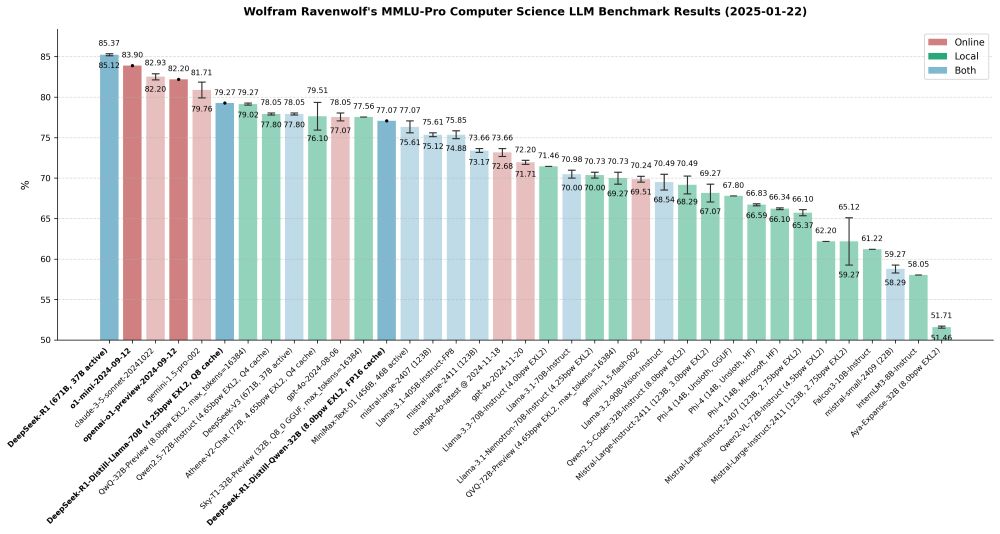

- Latest #AI benchmark results: DeepSeek-R1 (including its distilled variants) outperforms OpenAI's o1-mini and preview models. And the Llama 3 distilled version now holds the title of the highest-performing LLM I've tested locally to date. 🚀

- Hailuo released their open weights 456B (46B active) MoE LLM with 4M (yes, right, 4 million tokens!) context. And a VLM, too. They were already known for their video generation model, but this establishes them as a major player in the general AI scene. Well done! 👏 www.minimaxi.com/en/news/mini...

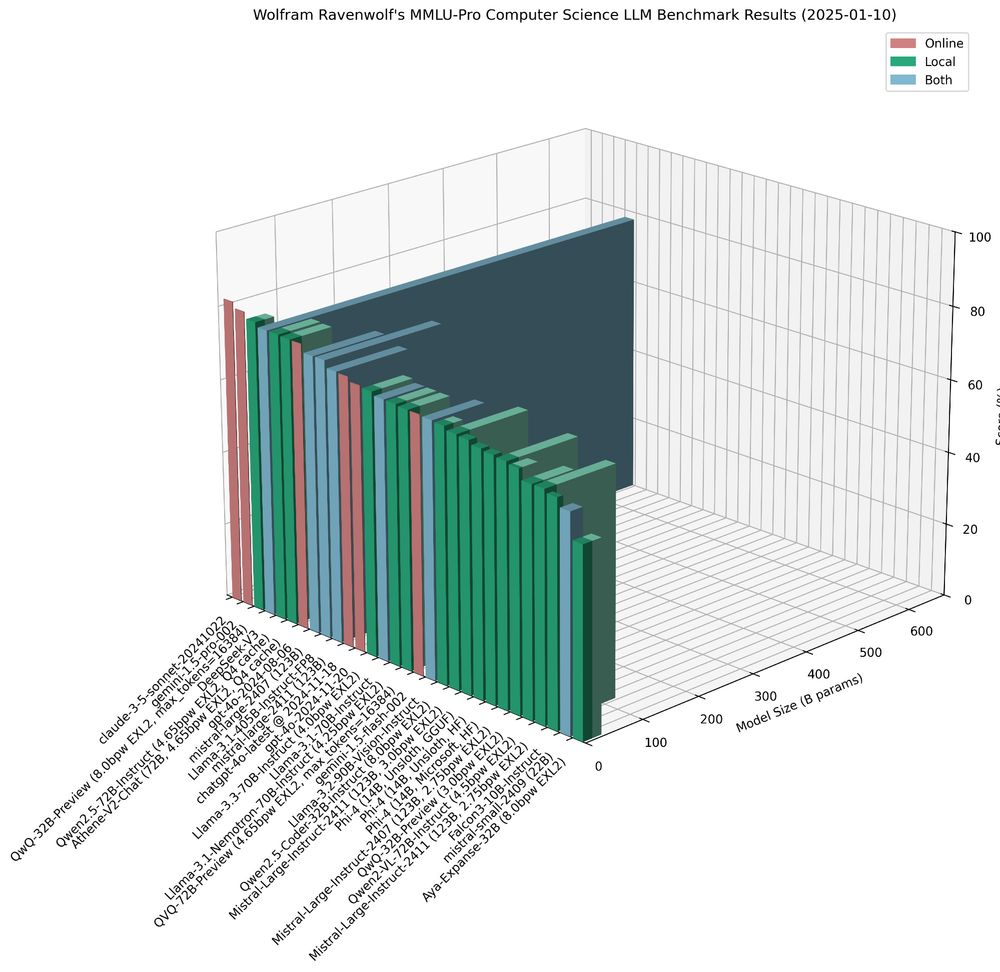

- I've updated my MMLU-Pro Computer Science LLM benchmark results with new data from recently tested models: three Phi-4 variants (Microsoft's official weights, plus Unsloth's fixed HF and GGUF versions), Qwen2 VL 72B Instruct, and Aya Expanse 32B. More details here: huggingface.co/blog/wolfram...

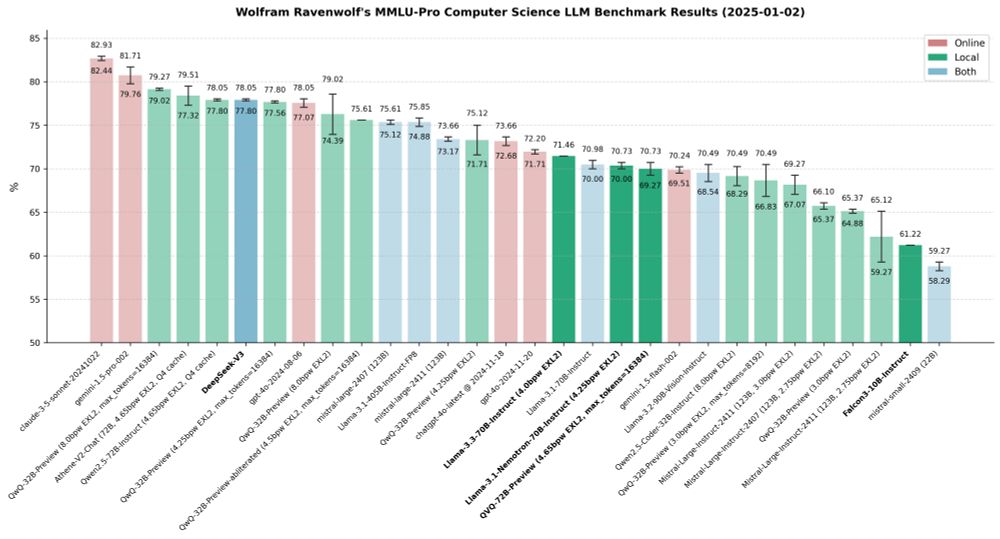

- New year, new benchmarks! Tested some new models (DeepSeek-V3, QVQ-72B-Preview, Falcon3 10B) that came out after my latest report, and some "older" ones (Llama 3.3 70B Instruct, Llama 3.1 Nemotron 70B Instruct) that I had not tested yet. Here is my detailed report: huggingface.co/blog/wolfram...

- Happy New Year! 🥂 Thank you all for being part of this incredible journey - friends, colleagues, clients, and of course family. 💖 May the new year bring you joy and success! Let's make 2025 a year to remember - filled with laughter, love, and of course, plenty of AI magic! ✨

- I've converted Qwen QVQ to EXL2 format and uploaded the 4.65bpw version. 32K context with 4-bit cache in less than 48 GB VRAM. Benchmarks are still running. Looking forward to finding out how it compares to QwQ, which was the best local model in my recent mass benchmark. huggingface.co/wolfram/QVQ-...

- Happy Holidays! It's the season of giving, so I too would like to share something with you all: Amy's Reasoning Prompt - just an excerpt from her prompt, but one that's been serving me well for quite some time. Curious to learn about your experience with it if you try this out...

- Holiday greetings to all my amazing AI colleagues, valued clients and wonderful friends! May your algorithms be bug-free and your neural networks be bright! ✨ HAPPY HOLIDAYS! 🎄

- Insightful paper addressing questions about Chain of Thought reasoning: Does CoT guide models toward answers, or do predetermined outcomes shape the CoT instead? arxiv.org/abs/2412.01113 Also fits my observations with QwQ: Smaller (quantized) versions required more tokens to find the same answers.

- Yeah, ChatGPT Pro is damn expensive at $200/month, right? But would you hire a personal assistant with a PhD who's available to work remotely for you at a minimum wage of $1.25/h with a 40-hour work week? And that guy even does any amount of overtime for free, even on weekends!

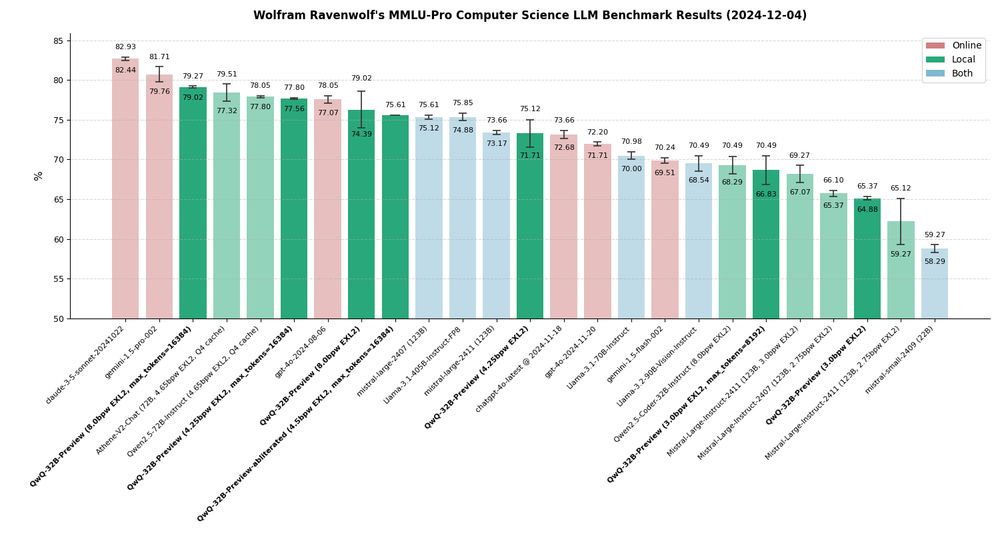
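The $1.25/h figure can be checked with a quick calculation. This is a minimal sketch that assumes a month of exactly four 40-hour weeks (a simplification; an average month is slightly longer, which would push the rate even lower).

```python
monthly_price_usd = 200  # ChatGPT Pro price quoted in the post
hours_per_week = 40      # work week quoted in the post
weeks_per_month = 4      # assumption: simplified four-week month

hours_per_month = hours_per_week * weeks_per_month
hourly_rate_usd = monthly_price_usd / hours_per_month

print(f"${hourly_rate_usd:.2f}/h")  # prints "$1.25/h"
```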

- How many people are using LLMs with suboptimal settings and never realize their true potential? Check your llama.cpp/Ollama default settings! I've seen 2K max context and 128 max new tokens on too many models that should have much higher values. Especially QwQ needs room to think.
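As a hedged illustration of overriding such defaults per request: to my knowledge, Ollama's REST API accepts an `options` object with `num_ctx` (context window) and `num_predict` (max new tokens), and llama.cpp's CLI exposes the equivalent `-c` / `-n` flags. The sketch below only builds the request body; the model tag `qwq`, the helper name `build_ollama_request`, and the chosen values are assumptions for illustration, so check what your model and VRAM actually support.

```python
import json


def build_ollama_request(model, prompt, num_ctx=32768, num_predict=8192):
    """Build a JSON body for Ollama's /api/generate with explicit limits.

    num_ctx and num_predict are illustrative values, not recommendations.
    """
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {
            "num_ctx": num_ctx,          # context window in tokens
            "num_predict": num_predict,  # maximum number of generated tokens
        },
    }


# "qwq" is a hypothetical model tag; use whatever tag you have pulled locally.
payload = build_ollama_request("qwq", "Explain the halting problem.")
print(json.dumps(payload, indent=2))
```

The body would then be sent as a POST to the local Ollama server's `/api/generate` endpoint; setting the limits explicitly avoids silently inheriting small defaults.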

- Reposted by Wolfram Ravenwolf: Change the name "Visual Studio Code" to "VSCode" and it will be unambiguous, and searching for what you want to know about will be much easier. Maybe when AIs take over, crap like giving a completely different product the same name as an existing product with a word added will be abandoned. I can't wait.

- Reposted by Wolfram Ravenwolf: I can't begin to describe how life-changing this new project, ShellSage, has been for me over the last few weeks. ShellSage is an LLM that lives in your terminal. It can see what directory you're in, what commands you've typed, what output you got, & your previous AI Q&A's.🧵

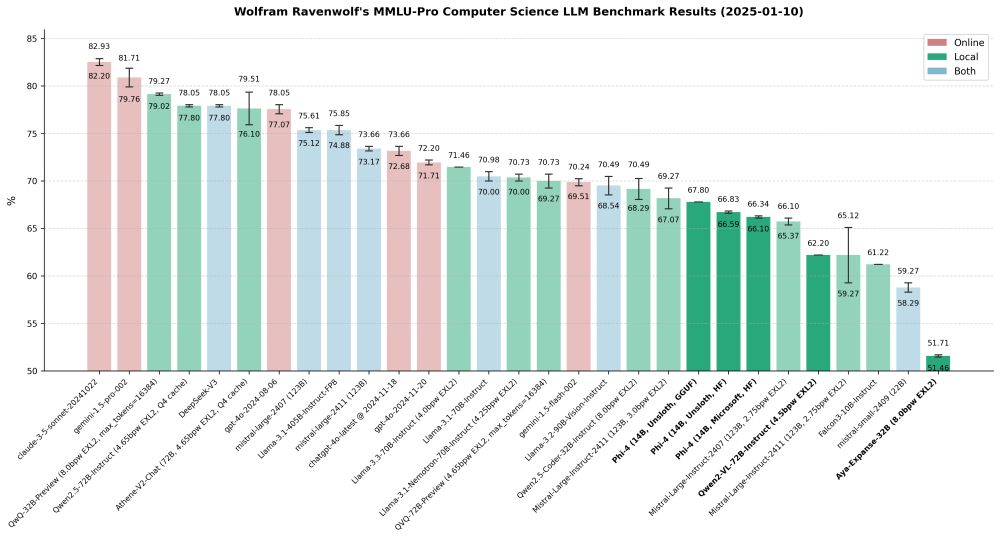

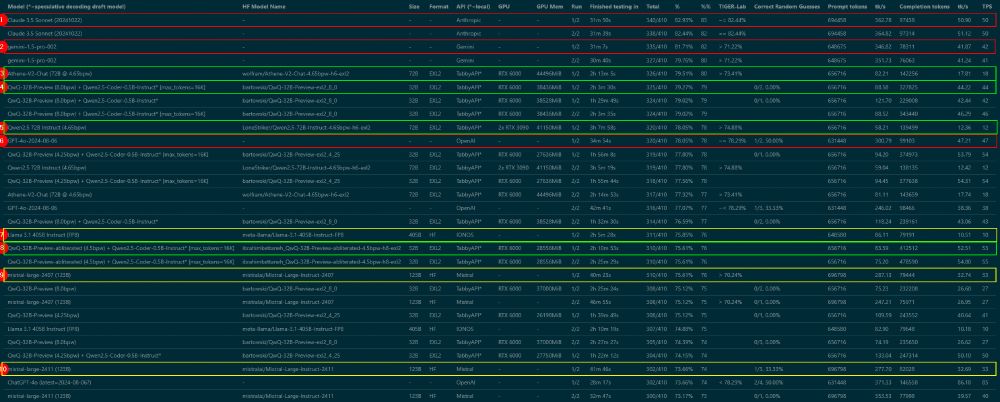

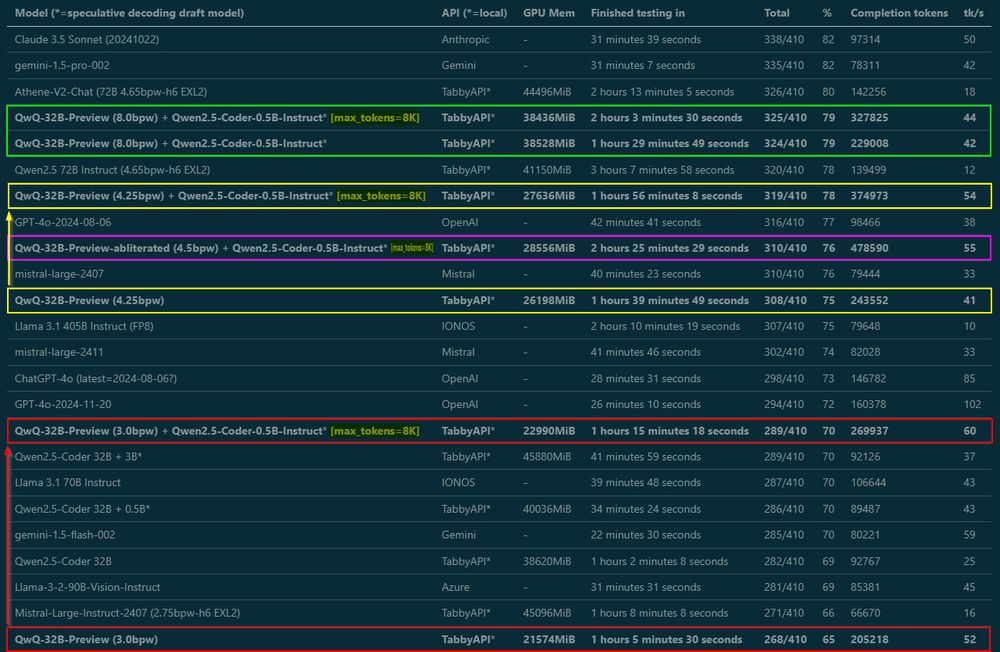

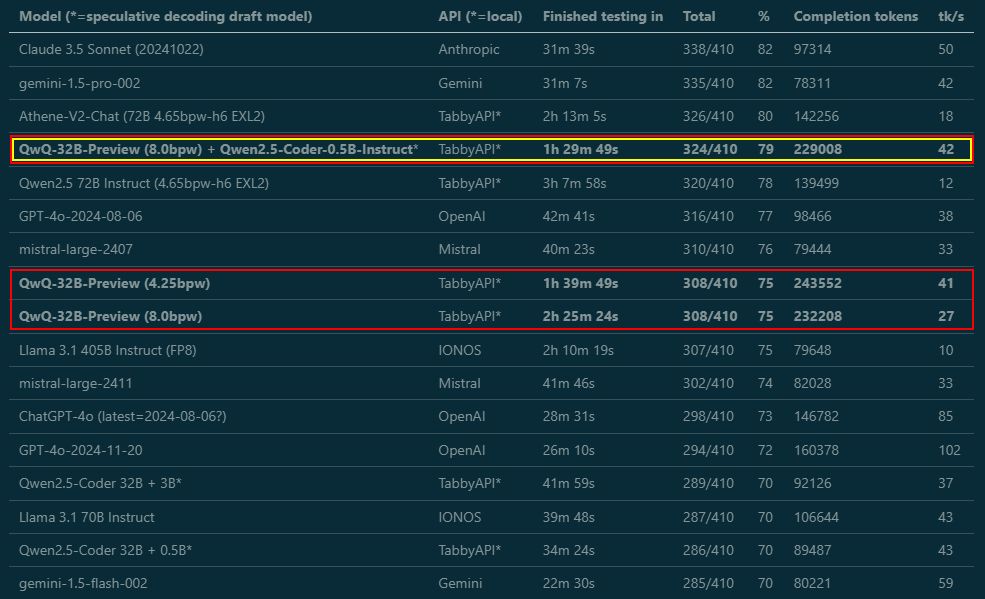

- Finally finished and published the detailed report of my latest LLM Comparison/Test on the HF Blog: 25 SOTA LLMs (including QwQ) through 59 MMLU-Pro CS benchmark runs: huggingface.co/blog/wolfram... Check out my findings - some of the results might surprise you just as much as they surprised me...

- Reposted by Wolfram Ravenwolf: 🚀 Introducing INCLUDE 🌍: A multilingual LLM evaluation benchmark spanning 44 languages! Contains *newly-collected* data, prioritizing *regional knowledge*. Setting the stage for truly global AI evaluation. Ready to see how your model measures up? #AI #Multilingual #LLM #NLProc

- It's official: After more than 57 runs of the MMLU-Pro CS benchmark across 25 LLMs with over 69 hours runtime, QwQ-32B-Preview is THE best local model! I'm still working on the detailed analysis, but here's the main graph that accurately depicts the quality of all tested models.

- Reposted by Wolfram Ravenwolf: This is a fun and highly informative weekly show to watch/hear, to catch up on LLM-related developments. Run by @altryne.bsky.social with commentary by @wolfram.ravenwolf.ai @yampeleg.bsky.social @nisten.bsky.social

- 🔥 This week's episode was indeed special, we chatted about the first open source intelligence model with @JustinLin610 from the @Alibaba_Qwen team, covered the H runner from @hcompany_ai , Olmo from @allen_ai and Hymba 1.5B from @nvidia Give this a watch/listen 👇

- Reposted by Wolfram Ravenwolf: Online courses are another level now. Here I have a video of a tutor, an AI assistant to answer all my questions, a notebook to run my code and play with it. Future of education is really bright. I hope something like this is in every school on every subject soon.

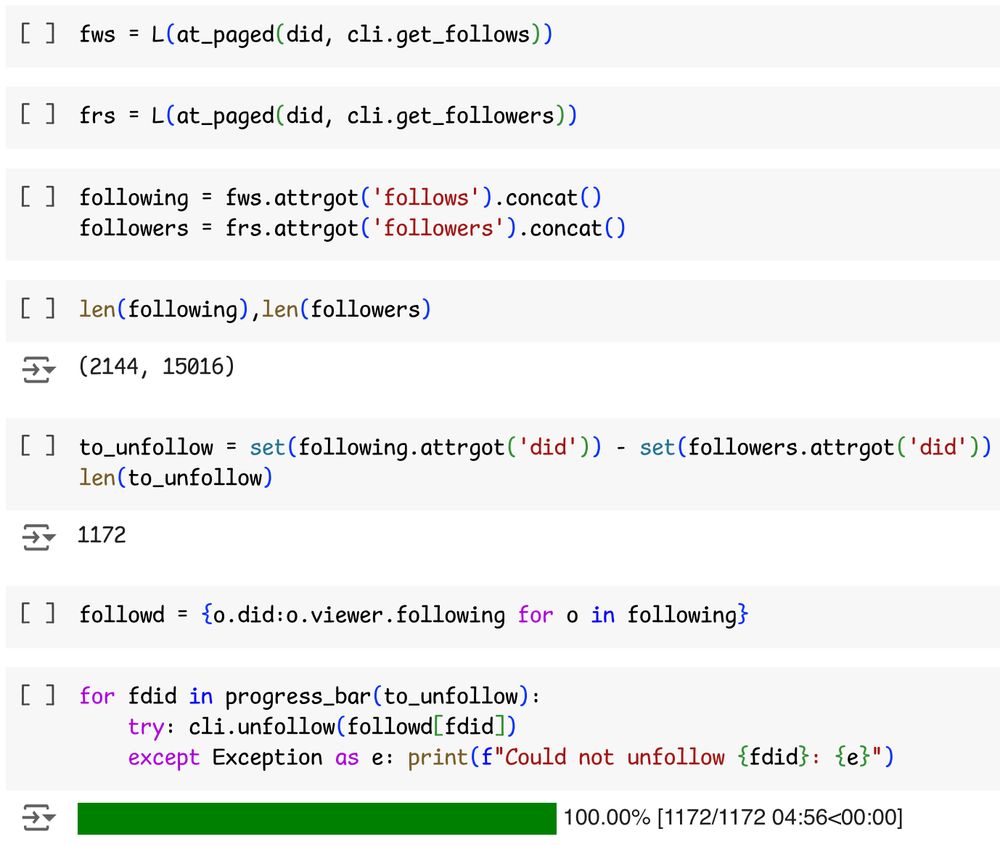

- Reposted by Wolfram Ravenwolf: I used a few starter packs to help connect with my communities, but after a couple of weeks I noticed nearly all the posts I'm interested in are from folks that follow me back. So I created an nb to unfollow non-mutual follows. Code in alt text, or here: colab.research.google.com/drive/1V7QjZ...

- Benchmark Progress Update: I've completed ANOTHER round to ensure accuracy - yes, I have now run ALL the benchmarks TWICE! While still compiling the results for a blog post, here's a sneak peek featuring detailed metrics and Top 10 rankings. Stay tuned for the complete analysis.

- Almost done benchmarking, write-up coming tomorrow – but wanted to share some important findings right away: Tested QwQ from 3 to 8 bit EXL2 in MMLU-Pro, and by raising max_tokens from default 2K to 8K, smaller quants got MUCH better scores. They need room to think!

- Smart Home Junkies & AI Aficionados! I've republished my (and Amy's) guide on how I turned Home Assistant into an AI powerhouse - AI powering my house: 🎙️ Voice assistants 🤖 ChatGPT, Claude, Google 💻 Local LLMs 📱 Visual assistant on tablet & watch huggingface.co/blog/wolfram... #SmartHome #AI