Epoch AI

We are a research institute investigating the trajectory of AI for the benefit of society.

epoch.ai

- We're hiring! Epoch AI is looking for a Head of Web Development. In this role, you would:
  - Help communicate our research while managing sustainable infrastructure
  - Manage a team of 3-5 engineers
  - Align our tech stack with the organization's long-term goals

- We’re hiring an Engineering Lead to help guide our Benchmarking team! Provide independent evaluations of today’s and tomorrow’s AI models, leading to better research, policy, and decision-making. The role is fully remote, and applications are rolling.

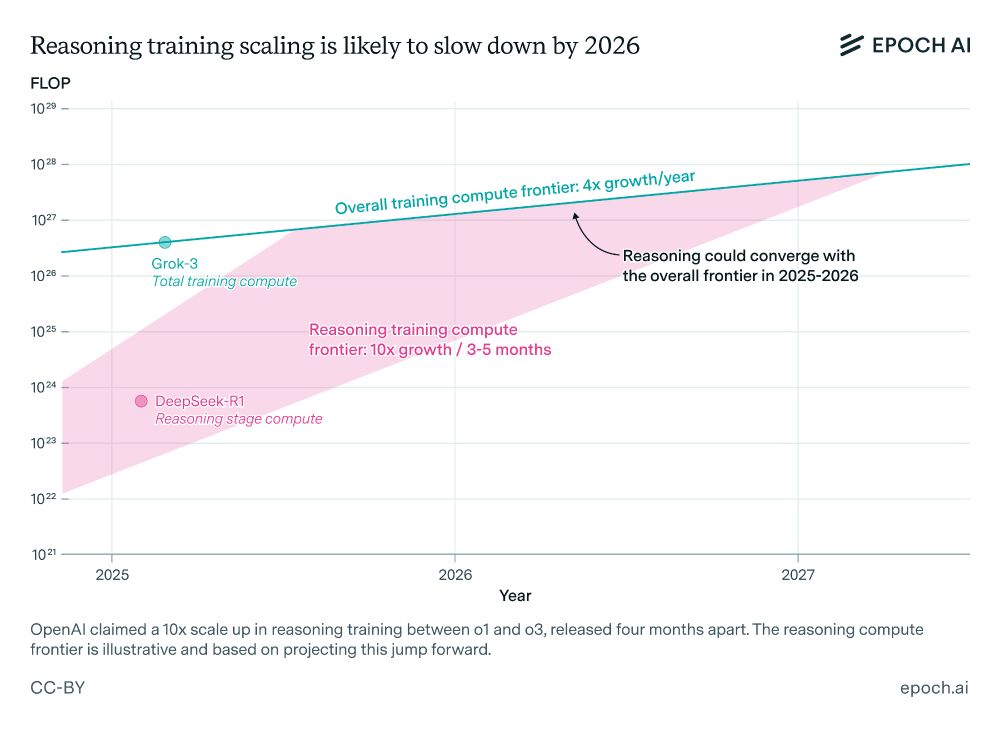

- Can AI developers continue scaling up reasoning models like o3? @justjoshinyou.bsky.social reviews the available evidence in this week’s Gradient Update, and it appears that the rapid scaling of reasoning training, like the jump from o1 to o3, will likely slow down in a year or so.

- Training frontier AI models requires a lot of power — but how much? After updating our analysis, we find that frontier training runs use on average 1.5x more energy than we previously thought. The pace of growth – 2.1x per year – is unchanged. Details below 🧵

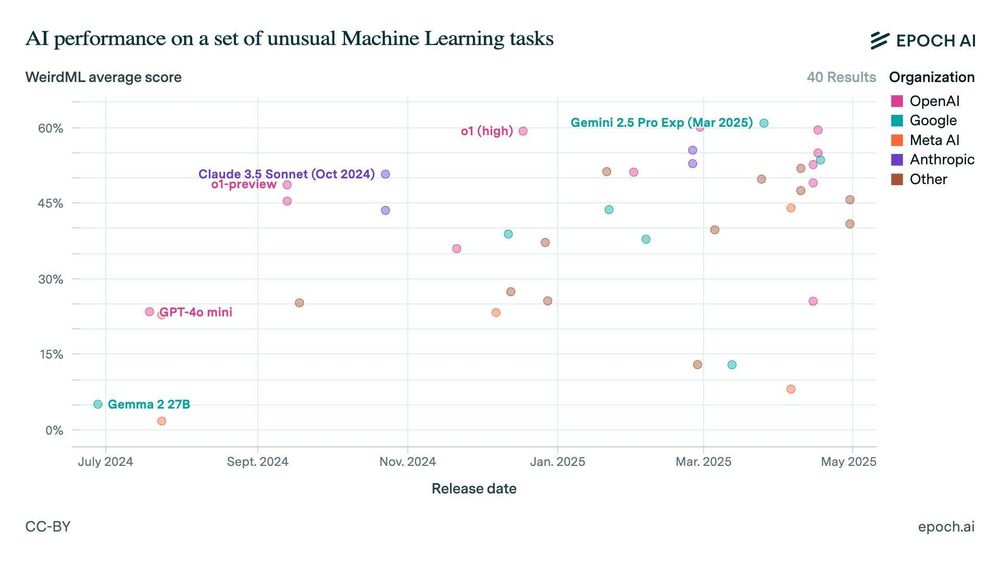

- We’ve added four new benchmarks to the Epoch AI Benchmarking Hub: Aider Polyglot, WeirdML, Balrog, and Factorio Learning Environment! Previously, we featured only our own evaluation results, but this new data comes from trusted external leaderboards. And we've got more on the way 🧵

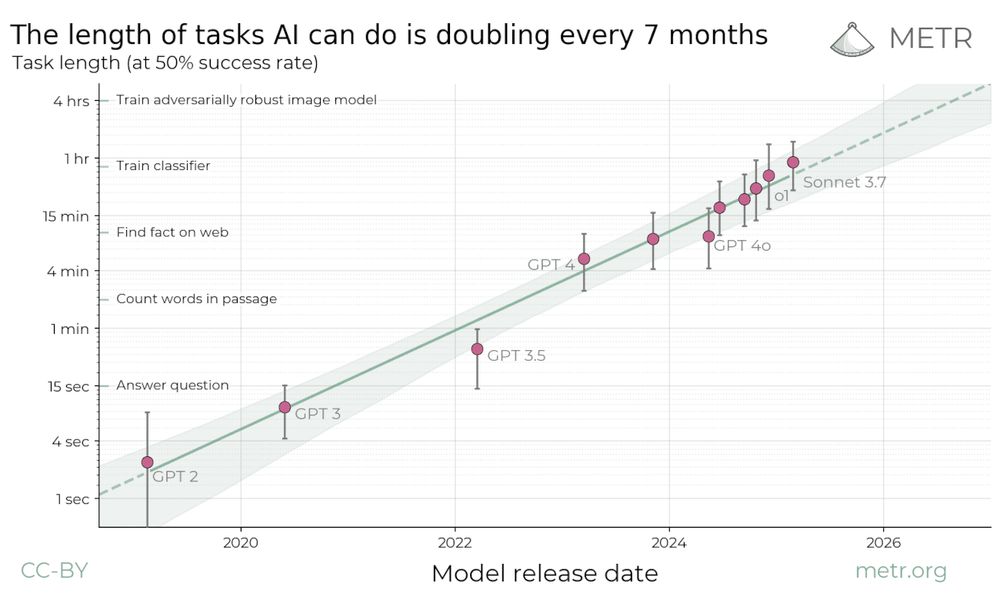

- Where’s my ten-minute AGI? If AI systems are able to perform tasks at 1-hour time horizons, as @metr.org found, why hasn’t AI automated more tasks already? In a new Gradient Update, Anson Ho argues this is due to three fundamental reasons. 🧵

- We've documented over 500 AI supercomputing clusters from 2019 onward. Among leading AI supercomputers, performance has been increasing at a rate of 2.5x per year. Thread of our insights 🧵
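
The growth rates quoted in the posts above (2.1x per year for training energy, 2.5x per year for leading supercomputer performance) can be easier to compare as doubling times. The following is a minimal sketch that assumes steady exponential growth at the stated annual factor. The function name `doubling_time_years` is hypothetical and chosen only for illustration; it is not taken from any Epoch AI code.

```python
import math


def doubling_time_years(annual_growth_factor: float) -> float:
    """Return the years needed to double, assuming constant exponential growth.

    Solves annual_growth_factor ** t == 2 for t.
    """
    if annual_growth_factor <= 1.0:
        raise ValueError("growth factor must be greater than 1 to ever double")
    return math.log(2.0) / math.log(annual_growth_factor)


if __name__ == "__main__":
    # Annual growth factors as quoted in the posts; labels are illustrative.
    for label, factor in [
        ("training energy", 2.1),
        ("supercomputer performance", 2.5),
    ]:
        months = 12.0 * doubling_time_years(factor)
        print(f"{label}: {factor}x per year, doubling roughly every {months:.1f} months")
```

Under that assumption, 2.1x per year corresponds to doubling a little under once a year, and 2.5x per year to doubling roughly every nine months.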