mattdesl

artist, coder

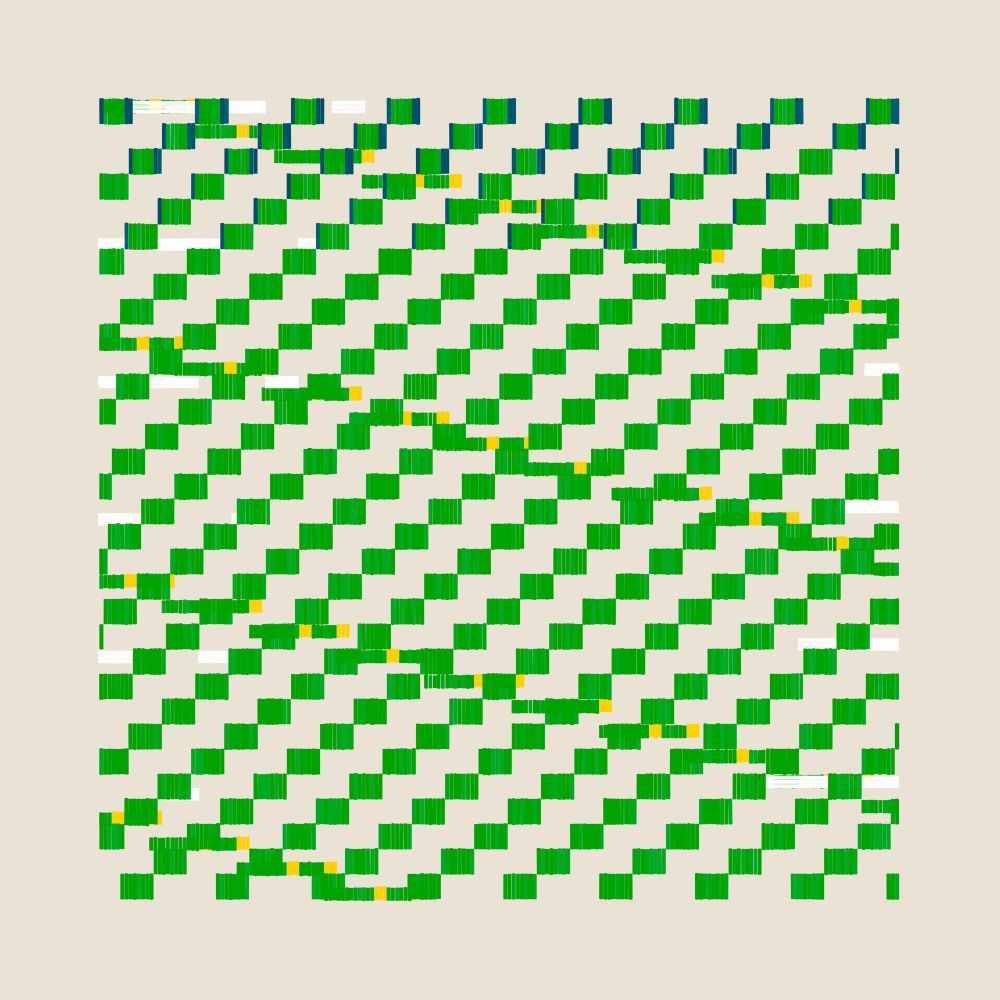

- Research/experiments building an OSS implementation of practical, real-time Kubelka-Munk pigment mixing. Not yet as good as Mixbox, but getting closer. Comparing a LUT (32x32x32, stored in a PNG) vs. a small neural net (2 hidden layers, 16 neurons).
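
The core of the two-constant Kubelka-Munk model is compact. Absorption (K) and scattering (S) curves mix linearly by concentration, and the reflectance of an opaque layer follows from the K/S ratio. This is a generic sketch of the textbook model, not the implementation from the post. The three-sample "spectra" and the pigment values below are placeholders.

```typescript
// Two-constant Kubelka-Munk sketch. Each pigment has absorption (K) and
// scattering (S) coefficients sampled at the same set of wavelengths.
type Pigment = { K: number[]; S: number[] };

// Mix pigments by concentration: in the two-constant model, K and S of the
// mixture are the concentration-weighted sums of the components' K and S.
function mixKS(pigments: Pigment[], conc: number[]): Pigment {
  const n = pigments[0].K.length;
  const K = new Array<number>(n).fill(0);
  const S = new Array<number>(n).fill(0);
  pigments.forEach((p, i) => {
    for (let w = 0; w < n; w++) {
      K[w] += conc[i] * p.K[w];
      S[w] += conc[i] * p.S[w];
    }
  });
  return { K, S };
}

// Reflectance of an opaque (infinitely thick) layer from its K/S ratio:
// R = 1 + K/S - sqrt((K/S)^2 + 2 K/S). Assumes S > 0 at every wavelength.
function reflectance({ K, S }: Pigment): number[] {
  return K.map((k, w) => {
    const ks = k / S[w];
    return 1 + ks - Math.sqrt(ks * ks + 2 * ks);
  });
}

// Placeholder pigments sampled at 3 wavelengths (short, mid, long); illustrative values only.
const white: Pigment = { K: [0.01, 0.01, 0.01], S: [1, 1, 1] };
const blue: Pigment = { K: [0.1, 0.8, 2.0], S: [0.3, 0.3, 0.3] };
const tint = reflectance(mixKS([white, blue], [0.7, 0.3]));
```

Turning the resulting reflectance curve into RGB also requires an illuminant and color-matching functions, which are omitted here.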

- What I’m building is a bit more homebrew, tailored specifically to a project I’m working on. The pigment curves are optimized to match physically measured spectral data, and the mixing model is quite different from spectral.js’s approach.

- using an evolutionary algorithm to paint the Mona Lisa in 200 rectangles. 🔧 source code in JS: github.com/mattdesl/snes
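
The repo name suggests Separable Natural Evolution Strategies (SNES). What follows is a hedged, generic sketch of the SNES loop on a plain numeric objective, not the code from the repo. For the painting, the parameter vector would encode the 200 rectangles and the objective would be the pixel difference from the target image. Both are omitted here. The hyperparameters are the commonly cited SNES defaults.

```typescript
// Seeded PRNG (mulberry32) so runs are reproducible.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Standard normal sample via the Box-Muller transform.
function gaussian(rand: () => number): number {
  let u = 0;
  while (u === 0) u = rand(); // avoid log(0)
  return Math.sqrt(-2 * Math.log(u)) * Math.cos(2 * Math.PI * rand());
}

// Separable NES: a diagonal Gaussian search distribution N(mu, sigma^2),
// updated each generation from rank-based utilities. Minimizes `f`.
function snes(
  f: (x: number[]) => number,
  mu0: number[],
  sigma0: number,
  generations: number,
  rand: () => number,
): number[] {
  const d = mu0.length;
  const mu = mu0.slice();
  const sigma = new Array<number>(d).fill(sigma0);
  const popSize = 4 + Math.floor(3 * Math.log(d));
  const etaMu = 1;
  const etaSigma = (3 + Math.log(d)) / (5 * Math.sqrt(d));
  // Utilities for ranks 1..popSize (best first); they sum to zero.
  const raw = Array.from({ length: popSize }, (_, k) =>
    Math.max(0, Math.log(popSize / 2 + 1) - Math.log(k + 1)),
  );
  const total = raw.reduce((a, b) => a + b, 0);
  const utils = raw.map((u) => u / total - 1 / popSize);

  for (let gen = 0; gen < generations; gen++) {
    const samples = Array.from({ length: popSize }, () => {
      const s = mu.map(() => gaussian(rand));
      return { s, fitness: f(mu.map((m, i) => m + sigma[i] * s[i])) };
    });
    samples.sort((a, b) => a.fitness - b.fitness); // lowest (best) first
    for (let i = 0; i < d; i++) {
      let gradMu = 0;
      let gradSigma = 0;
      samples.forEach(({ s }, k) => {
        gradMu += utils[k] * s[i];
        gradSigma += utils[k] * (s[i] * s[i] - 1);
      });
      mu[i] += etaMu * sigma[i] * gradMu;
      sigma[i] *= Math.exp((etaSigma / 2) * gradSigma);
    }
  }
  return mu;
}
```

Only the ranking of samples matters, not raw fitness values, which makes the update robust to however the image difference is scaled.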

- still image—

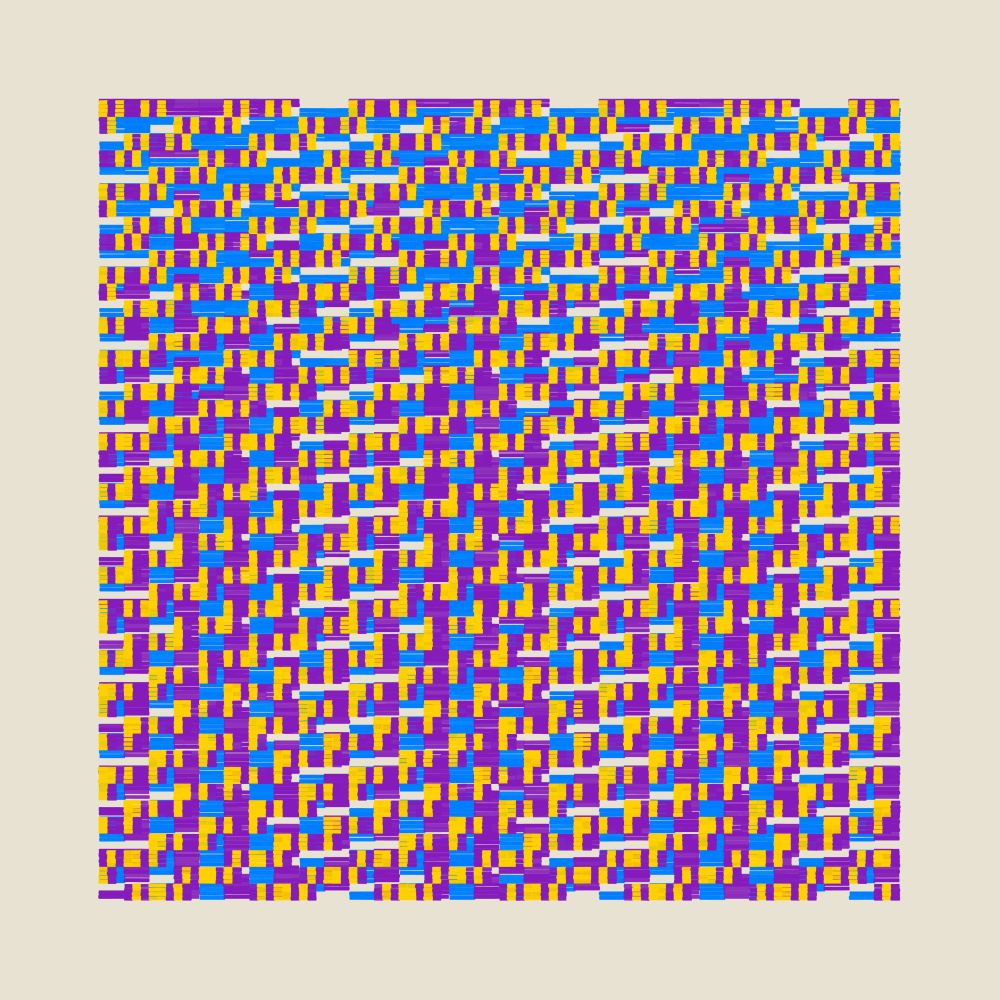

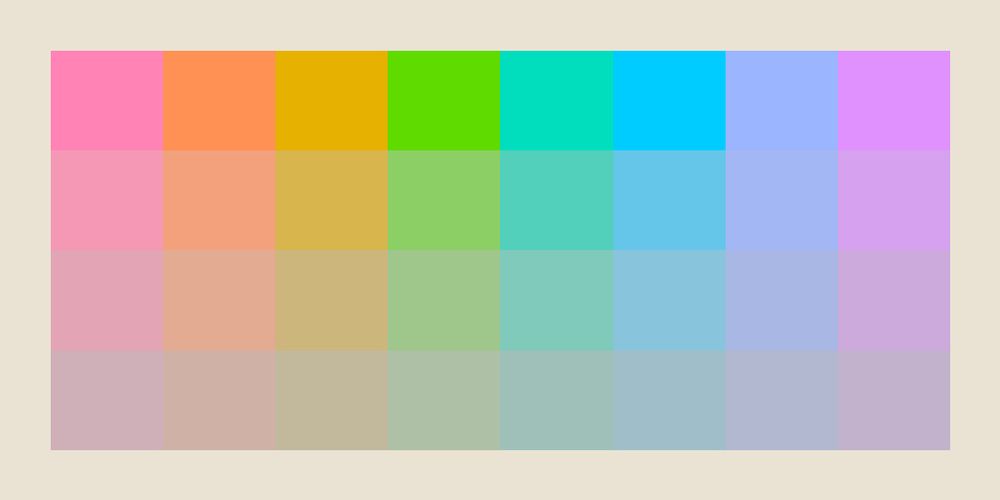

- Generating vibrant palettes with Kubelka-Munk pigment mixing, using 5 primaries (blue, yellow, red, white, black). The routine selects 2 pigments and a random concentration of the two, although it can extend to higher dimensions by sampling the N-dimensional pigment simplex.

- Here is another selection using combinations of three unique pigments instead of two. This is equivalent to uniform sampling within a simplex over three concentrations (a 2-simplex, i.e. a triangle, since the weights sum to 1). It leads to less purity in any single ink, and slightly lower overall saturation. (GIF doesn’t work on Bluesky, I guess?)
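
Both selection schemes can be sketched the same way. Choose k distinct pigments, then draw their concentrations uniformly from the simplex. With k = 2, this gives a uniformly random ratio of the two. Normalizing independent exponential variates is a standard way to sample the simplex uniformly (a flat Dirichlet distribution). The post doesn't say which sampler it uses, and `randomMix` is a hypothetical helper.

```typescript
// Uniformly sample k non-negative weights that sum to 1 (a flat Dirichlet draw):
// normalize i.i.d. Exponential(1) variates.
function sampleSimplex(k: number, rand: () => number = Math.random): number[] {
  const e = Array.from({ length: k }, () => -Math.log(1 - rand())); // 1 - rand() is in (0, 1]
  const sum = e.reduce((a, b) => a + b, 0);
  return e.map((x) => x / sum);
}

// Pick k distinct pigments from the palette and give them random concentrations.
function randomMix<T>(
  palette: T[],
  k: number,
  rand: () => number = Math.random,
): { pigment: T; c: number }[] {
  const pool = palette.slice();
  // Partial Fisher-Yates shuffle: the first k entries become a random k-subset.
  for (let i = 0; i < k; i++) {
    const j = i + Math.floor(rand() * (pool.length - i));
    [pool[i], pool[j]] = [pool[j], pool[i]];
  }
  const c = sampleSimplex(k, rand);
  return pool.slice(0, k).map((pigment, i) => ({ pigment, c: c[i] }));
}
```

Each returned `{ pigment, c }` list would then be fed to the KM mixer to produce one palette swatch.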

- A couple of things aren’t clear to me yet: how Mixbox achieves such vibrant saturation during interpolation, and how it handles black and achromatic ramps specifically. I may be struggling to match it because of my “imaginary” spectral coefficients.

- Adding another pigment (dimension) is quite easy with a neural network. Now it predicts concentrations for CMY + white + black, allowing for smooth grayscale ramps and giving us a bit of a wider pigment gamut.
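
Adding a pigment to the network mostly means widening its output layer. Below is a minimal forward pass with several assumptions. It takes a 3-value input color and uses two ReLU hidden layers of 16 units each. A softmax output keeps the five concentrations (C, M, Y, white, black) non-negative and summing to 1. The activations and the softmax head are assumptions, and the weights are random placeholders rather than trained values.

```typescript
type Layer = { W: number[][]; b: number[] }; // W[output][input]

function dense(layer: Layer, x: number[]): number[] {
  return layer.W.map((row, o) => row.reduce((acc, w, i) => acc + w * x[i], layer.b[o]));
}

const relu = (v: number[]) => v.map((x) => Math.max(0, x));

// Numerically stable softmax: outputs are positive and sum to 1.
function softmax(v: number[]): number[] {
  const m = Math.max(...v);
  const e = v.map((x) => Math.exp(x - m));
  const s = e.reduce((a, b) => a + b, 0);
  return e.map((x) => x / s);
}

// color (3) -> 16 -> 16 -> concentrations for C, M, Y, white, black (5).
function predictConcentrations(layers: Layer[], color: number[]): number[] {
  let h = color;
  for (let i = 0; i < layers.length - 1; i++) h = relu(dense(layers[i], h));
  return softmax(dense(layers[layers.length - 1], h));
}

// Hypothetical random initialization, only so the sketch runs; real weights come from training.
function randomLayer(inputs: number, outputs: number, rand = Math.random): Layer {
  const scale = Math.sqrt(2 / inputs);
  return {
    W: Array.from({ length: outputs }, () =>
      Array.from({ length: inputs }, () => (rand() * 2 - 1) * scale),
    ),
    b: new Array<number>(outputs).fill(0),
  };
}

const layers = [randomLayer(3, 16), randomLayer(16, 16), randomLayer(16, 5)];
```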

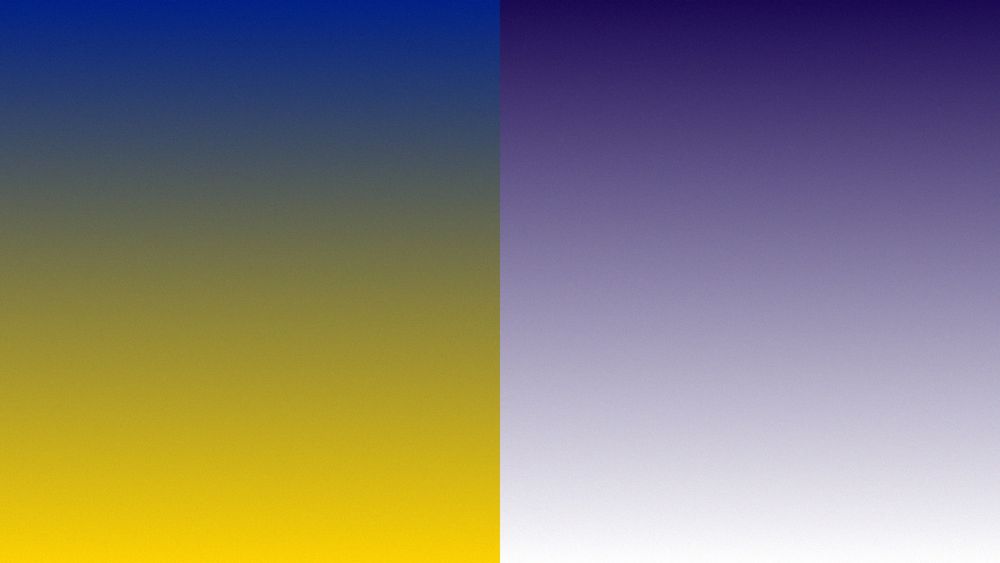

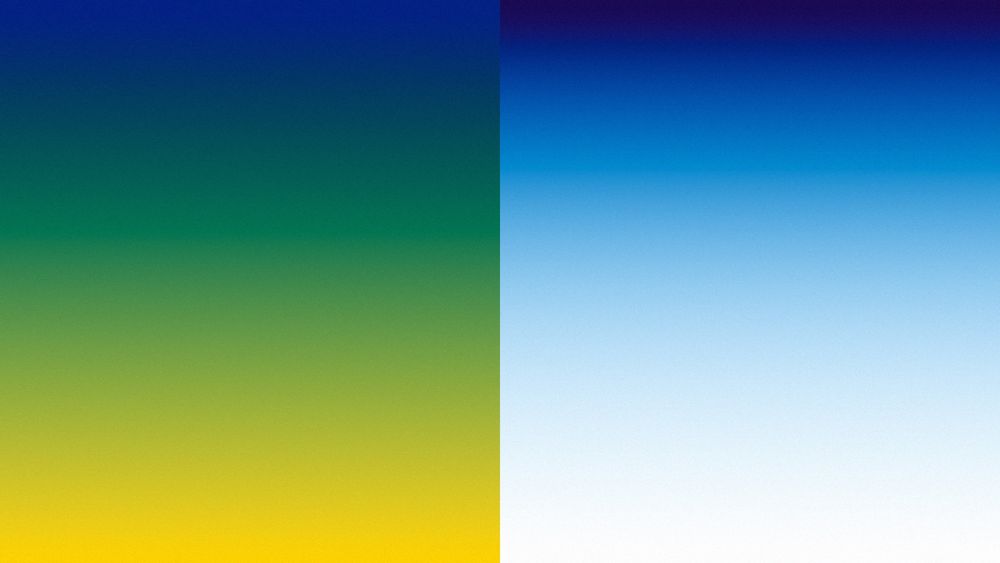

- I like the idea of a neural net as it's continuous, adapts to arbitrary input, and is fast to load and light on memory. However, as you can see, it has a hard time capturing the green that the LUT produces at the beginning (although it doesn’t exhibit the LUT's artifacts with the saturated purple input).
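
For comparison, a LUT is queried by trilinear interpolation between the 8 surrounding grid points. That interpolation is piecewise linear, so the result is continuous but only as detailed as the grid. The sketch assumes the 32x32x32 table has already been decoded from the PNG into a flat Float32Array in r-major order. The post doesn't describe the actual packing in the PNG, and `sampleLUT` is a hypothetical name.

```typescript
// Trilinear lookup into an N x N x N LUT with `channels` floats per entry.
// Assumed layout: index = ((r * N + g) * N + b) * channels. Requires N >= 2.
function sampleLUT(
  lut: Float32Array,
  N: number,
  channels: number,
  rgb: [number, number, number],
): number[] {
  const clamp01 = (x: number) => Math.min(1, Math.max(0, x));
  const pos = rgb.map((c) => clamp01(c) * (N - 1));
  const i0 = pos.map((p) => Math.min(Math.floor(p), N - 2)); // lower grid corner
  const t = pos.map((p, k) => p - i0[k]); // fractional position inside the cell
  const out = new Array<number>(channels).fill(0);
  for (let corner = 0; corner < 8; corner++) {
    const dr = (corner >> 2) & 1;
    const dg = (corner >> 1) & 1;
    const db = corner & 1;
    const w = (dr ? t[0] : 1 - t[0]) * (dg ? t[1] : 1 - t[1]) * (db ? t[2] : 1 - t[2]);
    const base = (((i0[0] + dr) * N + (i0[1] + dg)) * N + (i0[2] + db)) * channels;
    for (let c = 0; c < channels; c++) out[c] += w * lut[base + c];
  }
  return out;
}
```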

- I think certain dev tools could get away with it, like Vite or my own canvas-sketch. The UX of an Electron app may be better for the average user, but it also hurts experienced devs: not just install time, but also the lack of browser diversity, which is crucial if you're building for the web.

- Looks amazing Scott. 👏

- a late #genuary, "gradients only": working on an open source pigment mixing library based on Kubelka-Munk theory. left: before KM mixing; right: after KM mixing

- It's closer to Mixbox's implementation: it uses four primary pigments (each with a K and an S curve), then numerical optimization to find the best concentration of pigments for a given OKLab input color. It's only running in Python at the moment, but a LUT is possible. github.com/scrtwpns/mix...
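
The optimization step can be illustrated with a deliberately simple stand-in. Colors are converted to OKLab using Björn Ottosson's published matrices. The code then searches a discretized 4-pigment simplex for the concentrations whose predicted color is closest to the target. `mix` is a placeholder for a real Kubelka-Munk forward model. Exhaustive grid search is swapped in for whatever optimizer the linked Python code actually uses.

```typescript
// sRGB (gamma-encoded, 0..1) -> OKLab.
function srgbToOklab([r, g, b]: number[]): number[] {
  const lin = (c: number) => (c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4));
  const [R, G, B] = [lin(r), lin(g), lin(b)];
  const l = Math.cbrt(0.4122214708 * R + 0.5363325363 * G + 0.0514459929 * B);
  const m = Math.cbrt(0.2119034982 * R + 0.6806995451 * G + 0.1073969566 * B);
  const s = Math.cbrt(0.0883024619 * R + 0.2817188376 * G + 0.6299787005 * B);
  return [
    0.2104542553 * l + 0.793617785 * m - 0.0040720468 * s,
    1.9779984951 * l - 2.428592205 * m + 0.4505937099 * s,
    0.0259040371 * l + 0.7827717662 * m - 0.808675766 * s,
  ];
}

// Brute-force search over the 4-pigment simplex in steps of 1/res. `mix` maps
// concentrations to an sRGB color (hypothetical forward model). Returns the
// concentrations whose color is closest to the target in OKLab (squared distance).
function fitConcentrations(
  mix: (c: number[]) => number[],
  targetSrgb: number[],
  res = 20,
): number[] {
  const target = srgbToOklab(targetSrgb);
  let best = [1, 0, 0, 0];
  let bestErr = Infinity;
  for (let a = 0; a <= res; a++)
    for (let b = 0; a + b <= res; b++)
      for (let c = 0; a + b + c <= res; c++) {
        const conc = [a, b, c, res - a - b - c].map((x) => x / res);
        const lab = srgbToOklab(mix(conc));
        const err = lab.reduce((acc, v, i) => acc + (v - target[i]) ** 2, 0);
        if (err < bestErr) {
          bestErr = err;
          best = conc;
        }
      }
  return best;
}
```

A gradient-based or local optimizer would scale better than a grid as the number of pigments grows. Precomputing the results into a LUT, as suggested, avoids running any optimizer at runtime.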

- Added some plotter and high-res print tools to the open source Bitframes GitHub repo: Tools— print-bitframes.surge.sh Code— github.com/mattdesl/bit...

- It just sets the background fill to none at the moment. It would be nice to have more options like per layer exports though.

- Final few hours to mint a Bitframes before the crowdfund closes and edition size is locked. 100% of net proceeds are being directed to a documentary on the history of generative art. 📽️ Closes today at 5PM GMT (UK time). → bitframes.io

- Last week to mint and contribute to the Bitframes crowdfund! 100% of net proceeds are going to the production of a documentary film on the history of generative & computer art. 🎬 → bitframes.io

- COMPUTER ART IN THE MAINFRAME ERA— A ~40 min interview with professor and computer art history scholar Grant D. Taylor that I conducted during R&D for Bitframes. Listen → bitframes.io/episodes/1

- Thank you Marcin for supporting the project & film! ❤️

- Got bitframes #1199 to support the www.generativefilm.io project bitframes.io/gallery/toke...

- Bitframes #1153 (bitframes.io by @mattdesl.bsky.social)

- Woah! This is a fantastic output. Nice one. 👏

- Very nice collection! Love the minimalism of it.

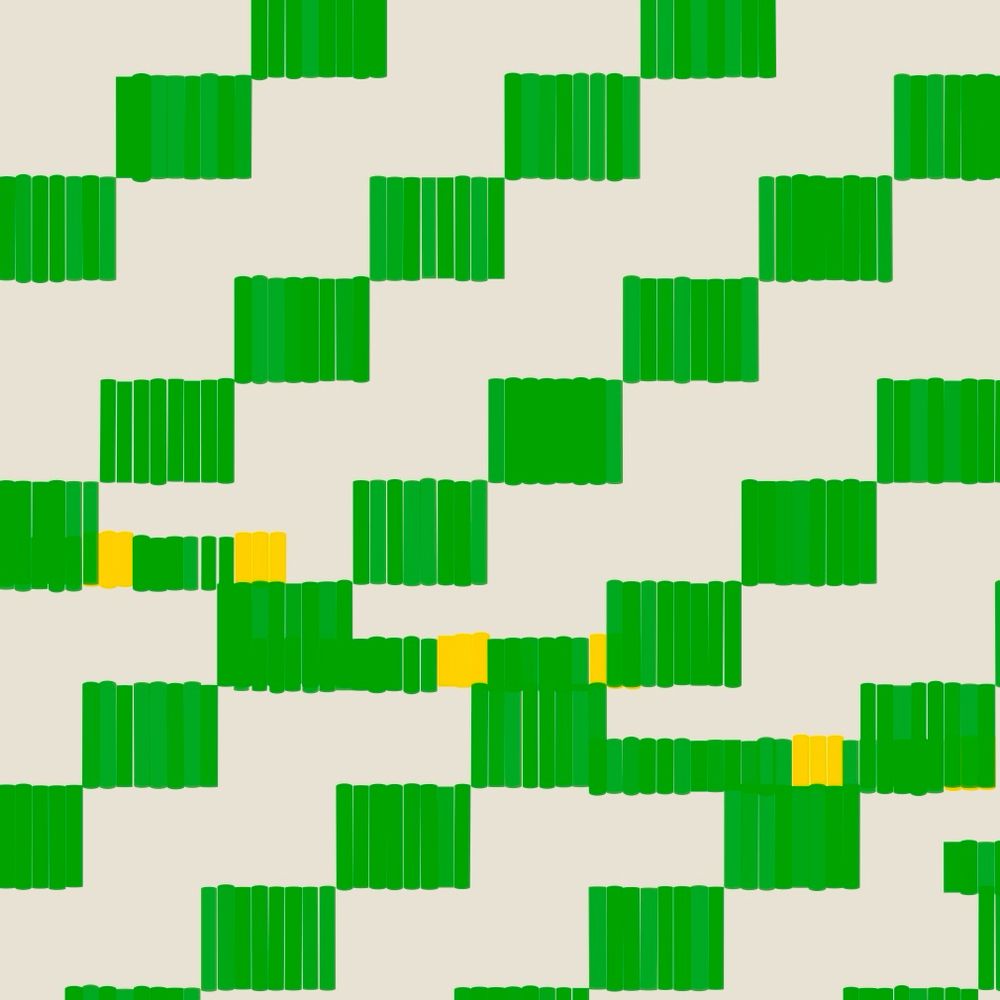

- Small detail in Bitframes—cells are filled by many horizontal & vertical hatch marks. I turn each of these marks into a 2D path to control the roundness of the end caps, rather than being stuck with the very circular arcs of Canvas2D lines. Code below: github.com/mattdesl/bit...
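
One way to get a tunable end cap is to build each mark's outline as a polygon. The sketch assumes the cap is a half-ellipse whose depth is a fraction of the stroke's half-width. The post doesn't describe the shape used. `hatchOutline` and its parameters are hypothetical names, not the Bitframes code linked above.

```typescript
type Point = [number, number];

// Outline of a horizontal hatch mark from (x0, y) to (x1, y) with thickness `width`.
// `roundness` in [0, 1] sets how far each elliptical cap bulges past its endpoint:
// 0 gives a flat (butt-like) end, 1 gives a semicircle like a round lineCap.
function hatchOutline(
  x0: number,
  x1: number,
  y: number,
  width: number,
  roundness: number,
  segments = 8,
): Point[] {
  const hw = width / 2;
  const capDepth = roundness * hw;
  const pts: Point[] = [];
  // Right cap: sweep from -90 to +90 degrees around (x1, y).
  for (let i = 0; i <= segments; i++) {
    const a = -Math.PI / 2 + (Math.PI * i) / segments;
    pts.push([x1 + capDepth * Math.cos(a), y + hw * Math.sin(a)]);
  }
  // Left cap: sweep from +90 to +270 degrees around (x0, y).
  for (let i = 0; i <= segments; i++) {
    const a = Math.PI / 2 + (Math.PI * i) / segments;
    pts.push([x0 + capDepth * Math.cos(a), y + hw * Math.sin(a)]);
  }
  return pts; // closing the path back to the first point draws the remaining long edge
}
```

To draw it, call `moveTo` on the first point and `lineTo` on the rest, then `closePath()` and `fill()`. Vertical marks can swap the x and y coordinates.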

- Some Bitframes tech/webdev notes: I ended up dropping the workers and offscreen canvas code. I found that sync (main thread) rendering with careful canvas pooling & caching, intersection testing, and rAF-deferred render queues was much simpler, more cross platform, and felt just as smooth.
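
Here is a minimal sketch of two of the pieces mentioned: canvas pooling and a rAF-deferred render queue. It assumes cells are identified by a string key and uses a fixed per-frame budget. Both are illustrative choices, not details from the post. The scheduler is injected, so the same code can run with requestAnimationFrame in a browser or with a manual callback elsewhere.

```typescript
// Generic object pool, e.g. for reusing canvases instead of allocating new ones.
class Pool<T> {
  private free: T[] = [];
  private create: () => T;
  constructor(create: () => T) {
    this.create = create;
  }
  acquire(): T {
    return this.free.pop() ?? this.create();
  }
  release(item: T): void {
    this.free.push(item);
  }
}

// Deferred render queue: repeated requests for the same key collapse into the
// latest one, and at most `budget` tasks run per scheduled frame.
class RenderQueue {
  private tasks = new Map<string, () => void>();
  private scheduled = false;
  private schedule: (cb: () => void) => void;
  private budget: number;
  constructor(schedule: (cb: () => void) => void, budget = 8) {
    this.schedule = schedule;
    this.budget = budget;
  }

  request(key: string, task: () => void): void {
    this.tasks.set(key, task); // replaces any pending task for this key
    if (!this.scheduled) {
      this.scheduled = true;
      this.schedule(() => this.flush());
    }
  }

  private flush(): void {
    this.scheduled = false;
    let ran = 0;
    for (const key of Array.from(this.tasks.keys())) {
      if (ran >= this.budget) break;
      const task = this.tasks.get(key); // read the latest task for this key
      if (!task) continue;
      this.tasks.delete(key);
      task();
      ran++;
    }
    // Leftover work continues on the next frame.
    if (this.tasks.size > 0 && !this.scheduled) {
      this.scheduled = true;
      this.schedule(() => this.flush());
    }
  }
}
```

In a browser, this could be wired up as `new RenderQueue((cb) => requestAnimationFrame(() => cb()))` and `new Pool(() => document.createElement("canvas"))`.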

- I also ended up dropping IndexedDB in favor of simple localStorage. It was adding hundreds of milliseconds per operation; surprisingly poor performance for a modern web API! And localforage was my largest dependency, so it was nice to strip that out.
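
Because localStorage is synchronous and stores only strings, a thin JSON wrapper is usually all that's needed. This sketch assumes JSON-serializable values. `createCache` and the key prefix are illustrative, not from the Bitframes code. Storage quotas are small (a few megabytes in most browsers), so this approach suits small records rather than large blobs.

```typescript
// The subset of the Web Storage API used here; window.localStorage implements it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// Hypothetical synchronous JSON cache over localStorage (or any KeyValueStore).
// Keys are namespaced with `prefix` to avoid clashing with other data on the origin.
function createCache<T>(store: KeyValueStore, prefix: string) {
  return {
    get(key: string): T | undefined {
      const raw = store.getItem(prefix + key);
      if (raw === null) return undefined;
      try {
        return JSON.parse(raw) as T;
      } catch {
        store.removeItem(prefix + key); // drop corrupted entries
        return undefined;
      }
    },
    // setItem throws when the storage quota is exceeded, so report success as a boolean.
    set(key: string, value: T): boolean {
      try {
        store.setItem(prefix + key, JSON.stringify(value));
        return true;
      } catch {
        return false;
      }
    },
  };
}
```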

- Display P3 seems to be working alright so far. I’m not sure if anybody noticed a “slightly more punchy yellow” and such, but it’s there. 😀 But I did need to wrap canvas rendering with extra save()/restore() calls; otherwise it would fail to draw lines in Safari.
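
A small helper keeps that pattern consistent. It runs each drawing step between `save()` and `restore()`, and it restores even if the step throws. This is a generic sketch. `withState` is a hypothetical name, and the interface covers only the two context methods it uses.

```typescript
// Minimal slice of CanvasRenderingContext2D used by the helper.
interface SaveRestore {
  save(): void;
  restore(): void;
}

// Run `draw` between save() and restore() so state changes (transforms,
// styles, clips) can't leak into later drawing, even if `draw` throws.
function withState<C extends SaveRestore, R>(ctx: C, draw: (ctx: C) => R): R {
  ctx.save();
  try {
    return draw(ctx);
  } finally {
    ctx.restore();
  }
}
```

In a browser, the context would come from `canvas.getContext("2d", { colorSpace: "display-p3" })`. P3 colors can then be given as CSS strings such as `color(display-p3 1 0.9 0)`. The post reports that the extra save()/restore() calls were needed in Safari; this helper is just one tidy way to add them.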

- Nearly 500 mints already! Thanks for all the support so far. ❤️ See them all: bitframes.io/gallery

- BITFRAMES— an open source generative artwork & film crowdfund, powered by Highlight. 100% of net proceeds are being directed toward the production of a feature-length documentary film on the history of generative art. → bitframes.io Nov 22 – Dec 20

- Bitframes — website is live! ✨ → bitframes.io An open source generative artwork & documentary film crowdfund powered by Highlight, launching tomorrow (Friday Nov 22, 5PM GMT). 100% of net proceeds are being directed to the film production.

- The project was a huge undertaking—really bringing together a lot of my frontend, backend, and creative coding skills. I plan to add more features over the coming weeks, like GIF and high res print exports. Excited to release this system as open source software & tools for the gen art community!

- Spoke with @monkantony.bsky.social from LeRandom about my next project, Bitframes—a generative artwork and documentary film crowdfund powered by Highlight. Link here: → www.lerandom.art/editorial/ma... Launching this Friday, Nov 22. Will share more details leading up to launch. 👀

- Nice drawings. 👍 What app are you using there?

- BITFRAMES — An open source generative artwork and blockchain-based crowdfund inspired by punched cards and the mainframe computing era. More details to come soon! 👀

- First release of @texel/color: a minimal and modern color library for JavaScript. 🎨 Features:
  - extremely fast
  - extremely compact
  - oklab, p3, rec2020, a98rgb, prophoto, srgb + more
  - gamut mapping + wide gamut support

  Repo → github.com/texel-org/co...

- Benchmarking a tiny Sobel image filter with JS + WASM. Zig is currently winning in performance, and the output is about as small as JS (or perhaps smaller, in some applications). ⚡️ Rust code is in the repo, but I haven't added it to the benchmark yet; PRs welcome! → github.com/mattdesl/wasm-bench
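
For reference, the kernel being benchmarked is small. Here is a plain TypeScript version. It is a generic Sobel implementation, not the repo's code, and the grayscale input layout and zeroed border are assumptions.

```typescript
// Sobel edge magnitude on a grayscale image (row-major, values 0..255).
// Border pixels are left at 0 so the inner loop needs no bounds checks.
function sobel(src: Uint8Array, width: number, height: number): Float32Array {
  const out = new Float32Array(width * height);
  for (let y = 1; y < height - 1; y++) {
    for (let x = 1; x < width - 1; x++) {
      const i = y * width + x;
      const tl = src[i - width - 1], t = src[i - width], tr = src[i - width + 1];
      const l = src[i - 1], r = src[i + 1];
      const bl = src[i + width - 1], b = src[i + width], br = src[i + width + 1];
      // Gx = [-1 0 1; -2 0 2; -1 0 1], Gy = [-1 -2 -1; 0 0 0; 1 2 1]
      const gx = tr + 2 * r + br - (tl + 2 * l + bl);
      const gy = bl + 2 * b + br - (tl + 2 * t + tr);
      out[i] = Math.sqrt(gx * gx + gy * gy);
    }
  }
  return out;
}
```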

- “the cloud” → gist.github.com/mattdesl/87a14b6b5a…

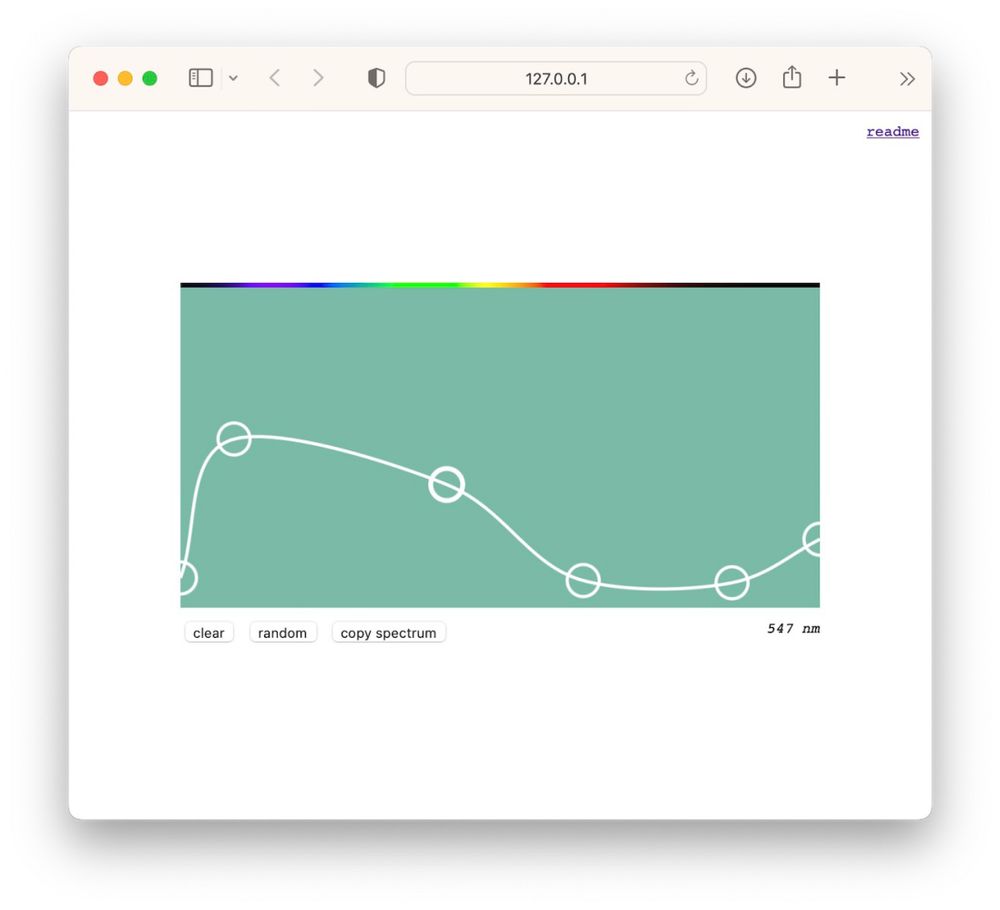

- color-spd — a small learning tool that lets you construct a color from a spectral power distribution, defined as a smooth cubic spline. github.com/mattdesl/color-spd
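
Going from an SPD to a displayable color takes three steps. First, evaluate the spline across the visible range. Next, integrate it against color-matching functions to get XYZ. Finally, convert XYZ to sRGB. The sketch makes several assumptions that color-spd itself may not share: a Catmull-Rom spline through evenly spaced control points from 380 to 780 nm, and an equal-energy illuminant. It also uses the analytic multi-lobe Gaussian approximation of the CIE 1931 color-matching functions by Wyman, Sloan and Shirley (2013) instead of tabulated data.

```typescript
// Catmull-Rom spline (an interpolating cubic) through evenly spaced values, t in [0, 1].
function catmullRom(values: number[], t: number): number {
  const n = values.length - 1;
  const x = Math.min(Math.max(t, 0), 1) * n;
  const i = Math.min(Math.floor(x), n - 1);
  const u = x - i;
  const p = (k: number) => values[Math.min(Math.max(k, 0), n)]; // clamp at the ends
  const p0 = p(i - 1), p1 = p(i), p2 = p(i + 1), p3 = p(i + 2);
  return 0.5 * (2 * p1 + (-p0 + p2) * u + (2 * p0 - 5 * p1 + 4 * p2 - p3) * u * u +
    (-p0 + 3 * p1 - 3 * p2 + p3) * u * u * u);
}

// Piecewise Gaussian with different widths left and right of the peak.
const g = (x: number, mu: number, s1: number, s2: number) => {
  const t = (x - mu) / (x < mu ? s1 : s2);
  return Math.exp(-0.5 * t * t);
};
// Approximate CIE 1931 2-degree color-matching functions (wavelength in nm).
const xBar = (w: number) => 1.056 * g(w, 599.8, 37.9, 31.0) + 0.362 * g(w, 442.0, 16.0, 26.7) - 0.065 * g(w, 501.1, 20.4, 26.2);
const yBar = (w: number) => 0.821 * g(w, 568.8, 46.9, 40.5) + 0.286 * g(w, 530.9, 16.3, 31.1);
const zBar = (w: number) => 1.217 * g(w, 437.0, 11.8, 36.0) + 0.681 * g(w, 459.0, 26.0, 13.8);

// Integrate the spline-defined SPD (treated as a reflectance under an equal-energy
// illuminant) every 5 nm; normalized so a perfect reflector has Y = 1.
function spdToXyz(controls: number[]): number[] {
  let X = 0, Y = 0, Z = 0, norm = 0;
  for (let w = 380; w <= 780; w += 5) {
    const r = catmullRom(controls, (w - 380) / 400);
    X += r * xBar(w);
    Y += r * yBar(w);
    Z += r * zBar(w);
    norm += yBar(w);
  }
  return [X / norm, Y / norm, Z / norm];
}

// XYZ -> gamma-encoded sRGB, with a naive clip for out-of-gamut colors.
function xyzToSrgb([X, Y, Z]: number[]): number[] {
  const lin = [
    3.2404542 * X - 1.5371385 * Y - 0.4985314 * Z,
    -0.969266 * X + 1.8760108 * Y + 0.041556 * Z,
    0.0556434 * X - 0.2040259 * Y + 1.0572252 * Z,
  ];
  return lin.map((c) => {
    const v = Math.min(Math.max(c, 0), 1);
    return v <= 0.0031308 ? 12.92 * v : 1.055 * Math.pow(v, 1 / 2.4) - 0.055;
  });
}
```

sRGB's white point is D65, not equal-energy, so a flat SPD comes out slightly off-neutral with this setup. A fuller tool would include a D65 illuminant in the integral.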

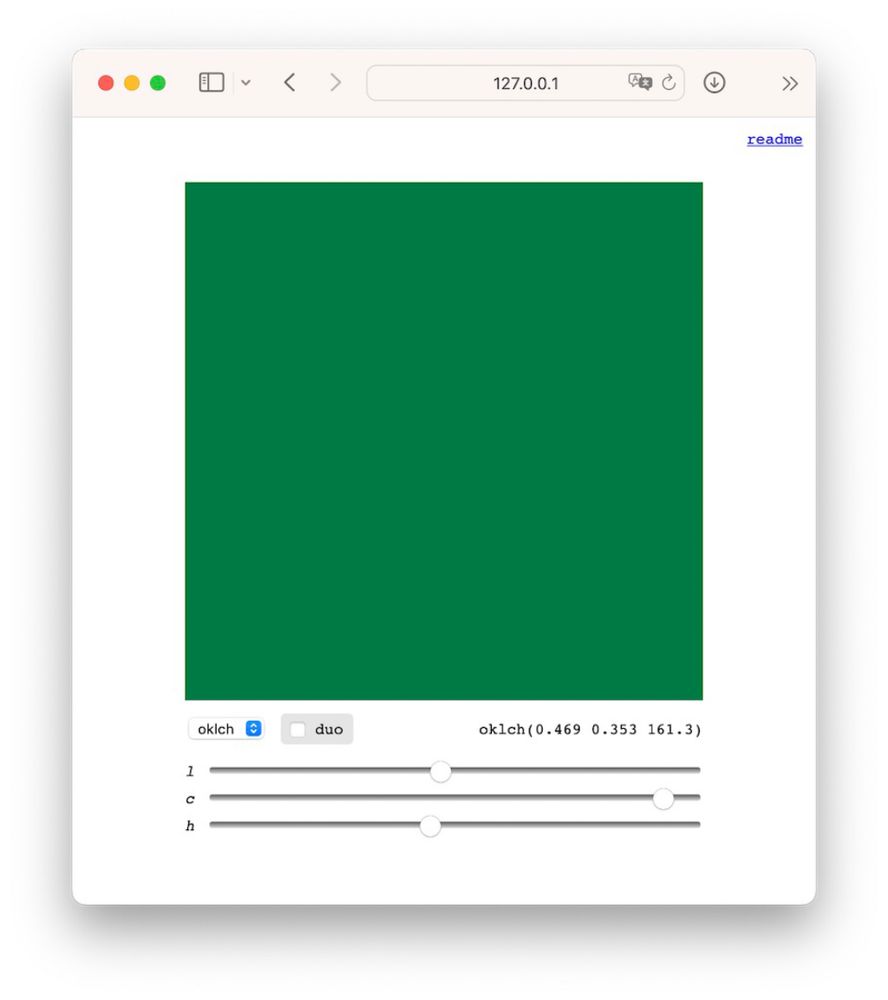

- “color swatch”—a small OSS tool for color interaction across a range of color spaces, desktop only for now. github.com/mattdesl/colorswatch

- another color tidbit: two colors with different spectral power distributions (graphing reflectance at each wavelength) can appear the same to humans because our 3 cones compress the visible spectrum. laser orange might look the same as red + half green. these are known as “metameric colors”.

- in theory, somebody with a 4th cone type (a tetrachromat) could distinguish between these two spectral distributions, seeing two different colors where the rest of us with 3 cones would just see a single colour.
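
Metamerism falls out of linear algebra. Each cone response is a dot product of the spectrum with that cone's sensitivity. Any spectral change orthogonal to all three sensitivities (a "metameric black") is therefore invisible to a trichromat, while an extra cone can still pick it up. The sketch uses Gaussian stand-ins for the S, M and L cones. The fourth cone is hypothetical, with its peak placed at 520 nm purely for illustration. None of these curves are measured data.

```typescript
// Sample the visible range every 10 nm.
const wavelengths = Array.from({ length: 31 }, (_, i) => 400 + i * 10);
const gauss = (mu: number, sigma: number): number[] =>
  wavelengths.map((w) => Math.exp(-0.5 * ((w - mu) / sigma) ** 2));

// Toy S, M, L cone sensitivities (Gaussian stand-ins, not measured cone fundamentals).
const cones = [gauss(445, 25), gauss(540, 40), gauss(565, 45)];
// Hypothetical extra cone for a tetrachromat; peak at 520 nm for illustration only.
const fourthCone = gauss(520, 30);

const dot = (a: number[], b: number[]) => a.reduce((s, x, i) => s + x * b[i], 0);
const response = (spd: number[], sens: number[][]) => sens.map((c) => dot(c, spd));

// Solve the 3x3 linear system M y = v with Cramer's rule.
function solve3(M: number[][], v: number[]): number[] {
  const det = (m: number[][]) =>
    m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1]) -
    m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0]) +
    m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
  const D = det(M);
  return [0, 1, 2].map(
    (col) => det(M.map((row, r) => row.map((x, c) => (c === col ? v[r] : x)))) / D,
  );
}

// Project out everything the three cones can see: p - C^T (C C^T)^-1 C p.
// Adding the result to any spectrum leaves all three cone responses unchanged.
function metamericBlack(p: number[]): number[] {
  const gram = cones.map((a) => cones.map((b) => dot(a, b)));
  const y = solve3(gram, response(p, cones));
  return p.map((x, i) => x - cones.reduce((s, c, k) => s + y[k] * c[i], 0));
}

const spdA = wavelengths.map(() => 1); // flat spectrum
const wiggle = wavelengths.map((w) => Math.sin((2 * Math.PI * (w - 400)) / 100));
const black = metamericBlack(wiggle);
// A different spectrum; the small scale factor is meant to keep it non-negative (physical).
const spdB = spdA.map((x, i) => x + 0.2 * black[i]);

console.log(response(spdA, cones), response(spdB, cones)); // equal up to rounding
console.log(dot(fourthCone, spdA), dot(fourthCone, spdB)); // differ
```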

- Lots of growth these past few days! Building an open-source network in public is both fun and challenging. Our small team is scrambling to finish long-planned moderation tooling like blocks, and set up our federation sandbox. In the meantime, we’ve made some changes to improve the experience:

- Is there a plan to support sign-in somehow in the protocol? Being able to verify accounts without relying on data sharing seems critical for the ecosystem to bloom.

- thinking of just using Bluesky to post about all the little tidbits on color I’ve been exploring over the last few weeks. like tetrachromats: rare in humans and not well studied, but possibly able to perceive a wider range of color separation than most! en.m.wikipedia.org/wiki/Tetrachromacy

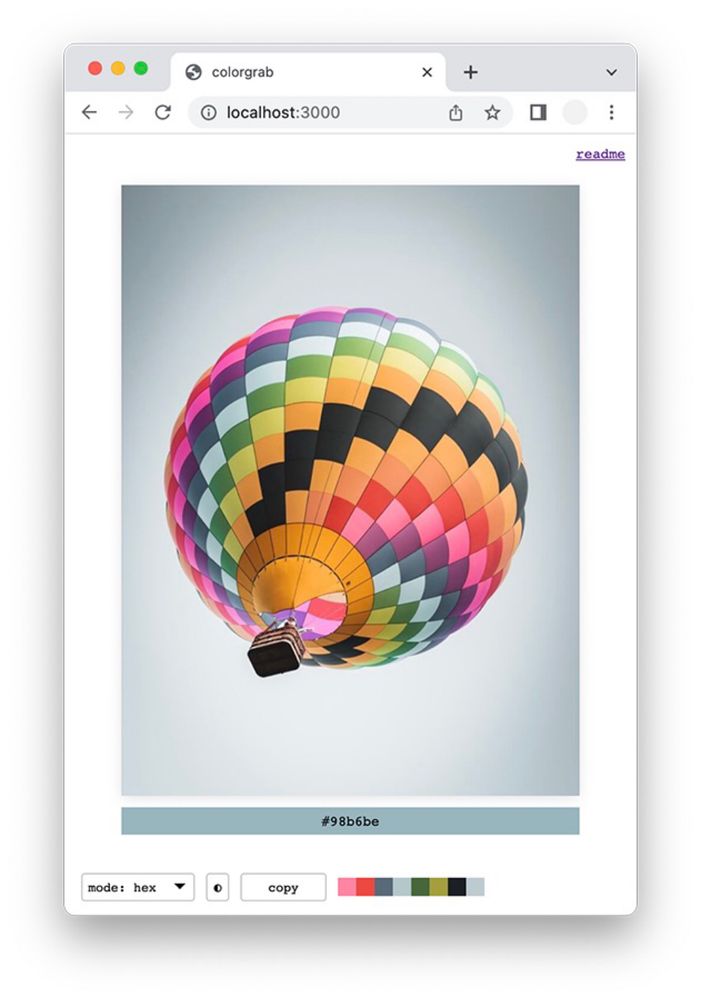

- Open sourcing some of the tools I’ve developed for my upcoming “Generative Color” workshop in Tokyo with Bright Moments. Colorgrab is a minimal desktop interface for grabbing & copying colors from an image as hex and JSON palettes: github.com/mattdesl/colorgrab

- would be great to have some customizable feeds, e.g. an optional “smoothing” filter to spread out the distribution of accounts and voices you are shown. It feels like on every social media platform the quiet voices are lost in a sea of super-posters.
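
One simple interpretation of such a smoothing filter is round-robin interleaving by author, so each account gets at most one slot per round. This is a hypothetical sketch of the idea, not an existing Bluesky feature. Within each author, the incoming (for example, chronological) order is preserved.

```typescript
type Post = { author: string; id: string };

// Hypothetical "smoothing" filter: interleave authors round-robin so a prolific
// account can't occupy a long run of consecutive feed slots.
function smoothFeed(posts: Post[]): Post[] {
  const byAuthor = new Map<string, Post[]>(); // insertion order = first appearance
  for (const p of posts) {
    const list = byAuthor.get(p.author);
    if (list) list.push(p);
    else byAuthor.set(p.author, [p]);
  }
  const queues = Array.from(byAuthor.values());
  const out: Post[] = [];
  for (let round = 0; out.length < posts.length; round++) {
    for (const q of queues) if (round < q.length) out.push(q[round]);
  }
  return out;
}
```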

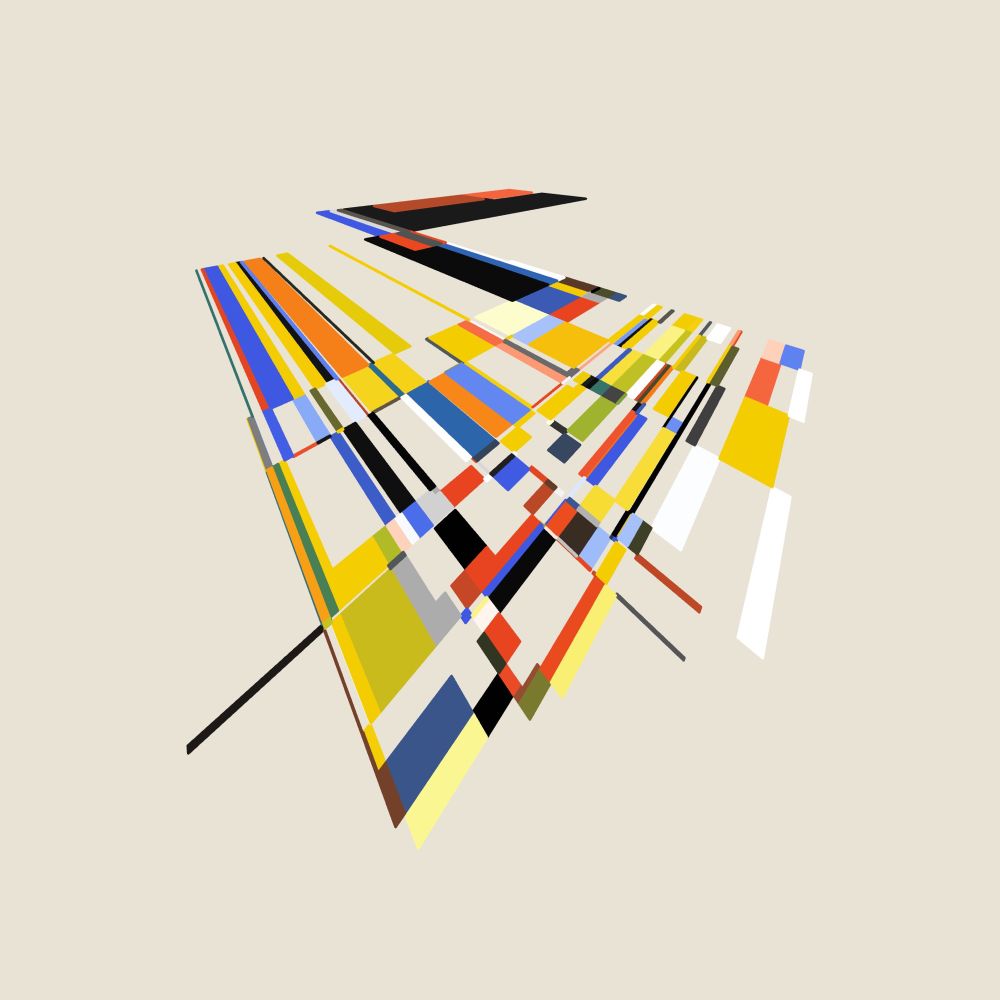

- planar graphs — experiments in color mixing with spectral.js, emergent colour palettes from a limited set of three primaries. colour studies as part of developing a new generative art workshop for Bright Moments Tokyo.

- this is also an attempt to disentangle the underlying architecture of Figma’s “vector networks” by constructing a cycle basis for a straight-line planar graph. something I could imagine using for many other geometries and compositions.
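
A standard way to get the faces of a straight-line planar graph is to sort each vertex's neighbors by angle, then walk half-edges, always turning onto the next edge clockwise. For a connected graph with no crossing edges, the boundaries of the bounded faces form a cycle basis. This is a generic sketch of that technique, not Figma's or the author's implementation. It assumes no crossing edges, no dangling edges and no collinear duplicate edges.

```typescript
type Vec = [number, number];

// Enumerate faces by walking half-edges: from u->v, continue along the edge at v
// that comes immediately clockwise from v->u in angular order.
function planarFaces(points: Vec[], edges: [number, number][]): number[][] {
  const angle = (a: number, b: number) =>
    Math.atan2(points[b][1] - points[a][1], points[b][0] - points[a][0]);
  // Neighbors of each vertex, sorted counter-clockwise by angle.
  const nbrs: number[][] = points.map(() => []);
  for (const [a, b] of edges) {
    nbrs[a].push(b);
    nbrs[b].push(a);
  }
  nbrs.forEach((list, v) => list.sort((p, q) => angle(v, p) - angle(v, q)));

  const visited = new Set<string>();
  const faces: number[][] = [];
  for (const [a, b] of edges) {
    for (const [s, t] of [[a, b], [b, a]]) {
      if (visited.has(`${s},${t}`)) continue;
      const face: number[] = [];
      let u = s;
      let v = t;
      while (!visited.has(`${u},${v}`)) {
        visited.add(`${u},${v}`);
        face.push(u);
        const list = nbrs[v];
        const k = list.indexOf(u);
        const w = list[(k - 1 + list.length) % list.length]; // next clockwise
        u = v;
        v = w;
      }
      faces.push(face);
    }
  }
  return faces;
}

// Shoelace formula: bounded faces come out positive, the outer face negative.
function signedArea(points: Vec[], face: number[]): number {
  let s = 0;
  for (let k = 0; k < face.length; k++) {
    const [x1, y1] = points[face[k]];
    const [x2, y2] = points[face[(k + 1) % face.length]];
    s += x1 * y2 - x2 * y1;
  }
  return s / 2;
}
```

The sign convention is relative to the input coordinates, so it holds whether y points up or down. Filtering with `signedArea(...) > 0` drops the outer face of each connected component.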

- so is the Bluesky social graph truly portable? Including follows/followers, posts, and muted accounts? I imagine things like media hosting must be centralized on Bluesky servers…

- 👋 hey!

- hello world 👋