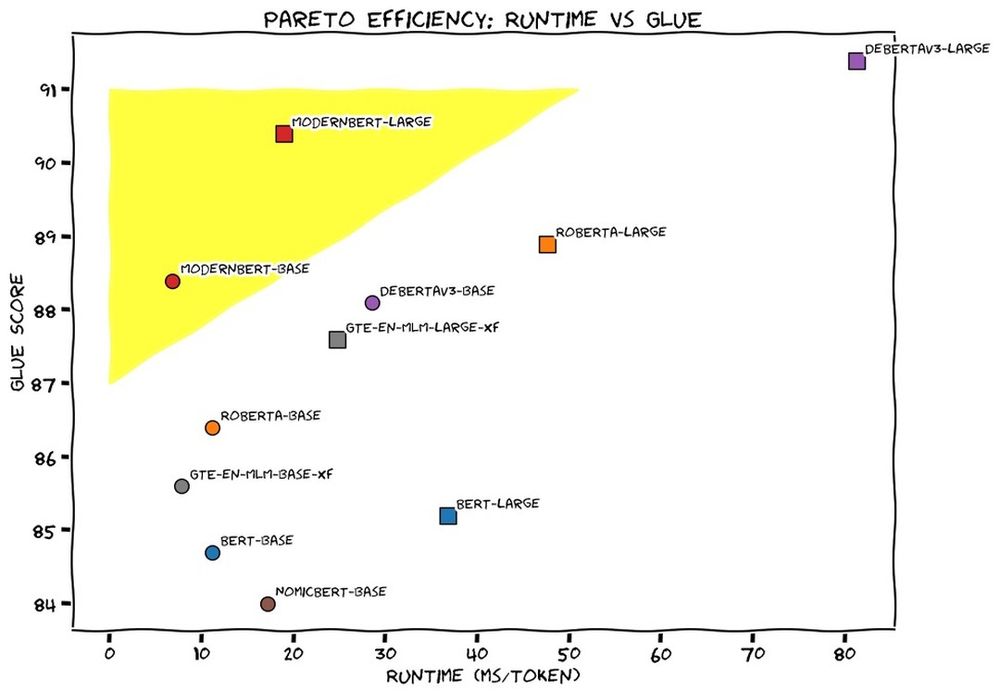

- This week we released ModernBERT, the first encoder to reach SOTA on most common benchmarks across language understanding, retrieval, and code, while running twice as fast as DeBERTaV3 on short context and three times faster than NomicBERT & GTE on long context. (Dec 22, 2024 06:12)

- ModernBERT is available to use today in Transformers (pip install from main). More details in our announcement post: huggingface.co/blog/modernb...

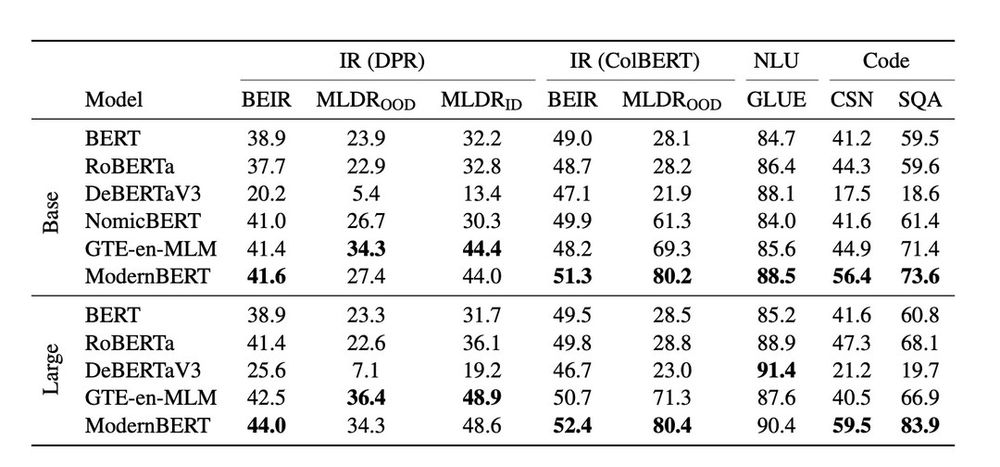

- ModernBERT-base is the first encoder to beat DeBERTaV3-base on GLUE. ModernBERT is also competitive or top-scoring on single-vector retrieval, ColBERT retrieval, and programming benchmarks.

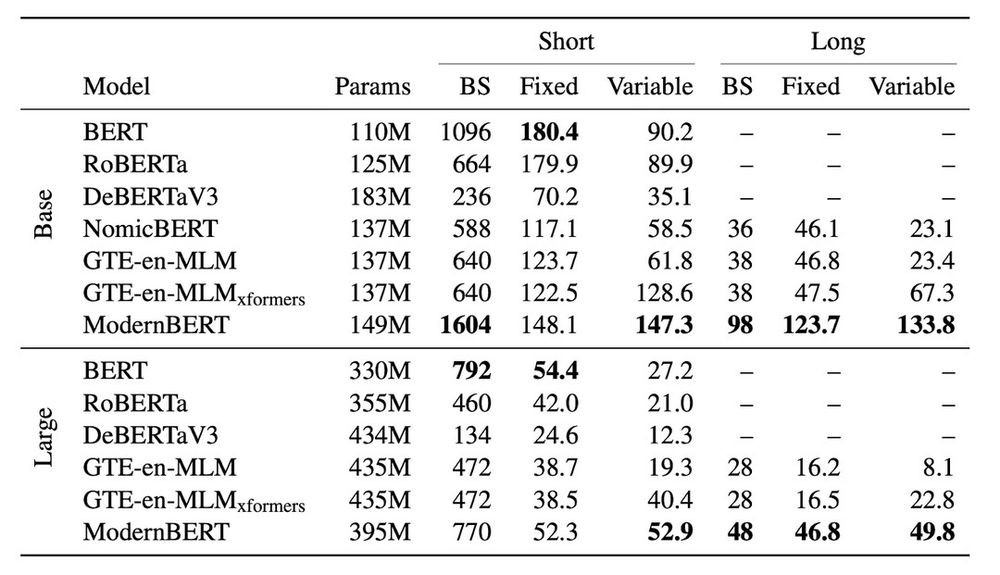

- ModernBERT was designed from the ground up for speed and memory efficiency: it is both faster and more memory-efficient than every major encoder released since the original BERT.

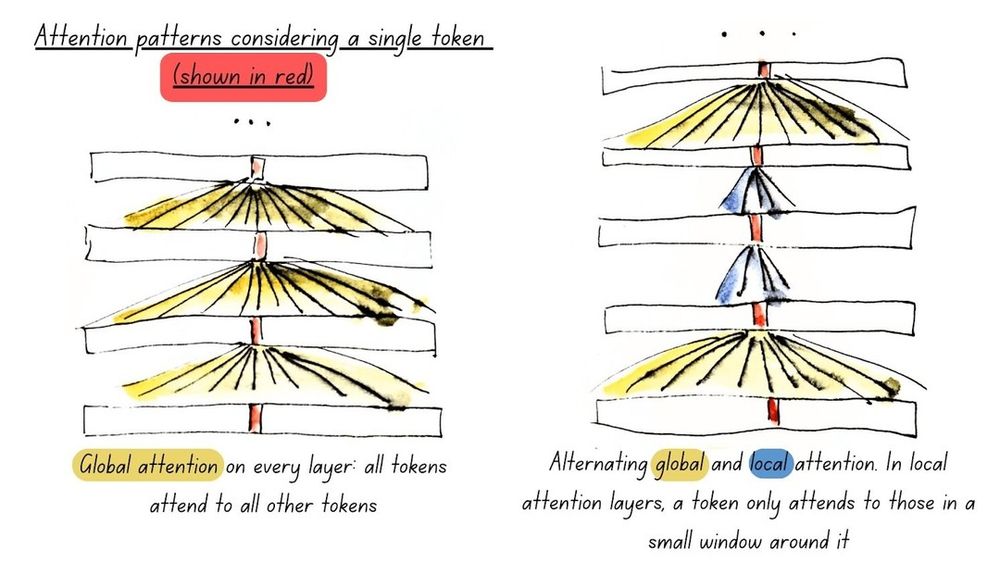

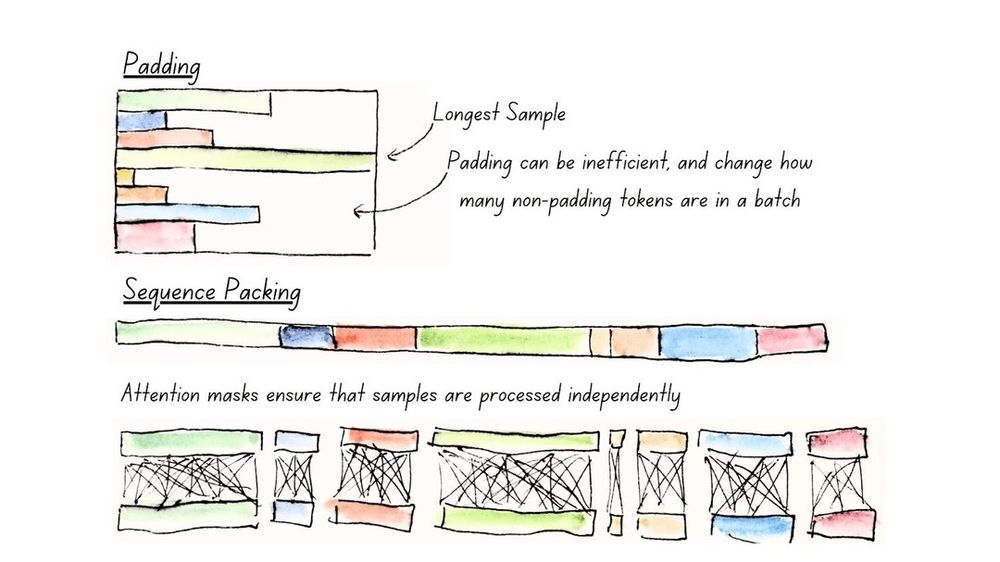

- How did we do it? First, we brought all the modern LLM architectural improvements to encoders, including alternating global & local attention, RoPE, and GeGLU layers, and added full model unpadding using Flash Attention for maximum performance (illustrated in the next post).
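For readers curious how alternating global & local attention works, here is a minimal pure-Python sketch that builds a per-layer attention mask. The every-third-layer global schedule, the 128-token default window, and the names `is_global_layer` and `attention_mask` are illustrative assumptions, not ModernBERT's actual code; check the preprint for the real configuration. Production kernels such as Flash Attention typically apply the sliding window inside the kernel rather than building a full mask like this.

```python
from typing import List


def is_global_layer(layer_idx: int, global_every: int = 3) -> bool:
    """Assumed schedule: layers 0, 3, 6, ... use global attention; the rest are local."""
    return layer_idx % global_every == 0


def attention_mask(seq_len: int, layer_idx: int, window: int = 128,
                   global_every: int = 3) -> List[List[bool]]:
    """Boolean seq_len x seq_len mask where mask[i][j] is True if query i may attend to key j.

    Encoders are bidirectional, so the local window is symmetric: each token sees
    itself plus up to window // 2 neighbours on each side.
    """
    if is_global_layer(layer_idx, global_every):
        # Global layer: every token attends to every other token.
        return [[True] * seq_len for _ in range(seq_len)]
    half = window // 2
    # Local layer: sliding window centred on each query position.
    return [[abs(i - j) <= half for j in range(seq_len)] for i in range(seq_len)]


if __name__ == "__main__":
    n = 8
    for layer in range(3):
        mask = attention_mask(n, layer, window=4)
        visible = sum(row.count(True) for row in mask)
        kind = "global" if is_global_layer(layer) else "local"
        print(f"layer {layer}: {kind}, {visible} of {n * n} query/key pairs visible")
```

With a toy window of 4, each of the 8 tokens in a local layer sees at most 5 positions, so only 34 of the 64 query/key pairs are visible; at long sequence lengths that gap is what keeps the local layers cheap while the periodic global layers still mix information across the whole context.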

- Second, we carefully designed ModernBERT's architecture to run efficiently across the most common GPUs. Many older models don't consider the hardware they will run on and are slower than they should be. Not so with ModernBERT. (Full-model sequence packing illustrated below.)
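A rough sketch of the unpadding and sequence-packing idea mentioned above, in plain Python and assuming a right-padded batch with a Hugging Face style 0/1 attention mask: padding tokens are dropped, the remaining tokens are concatenated into one stream, and cumulative offsets (`cu_seqlens`) record where each sequence starts. That offset layout is the kind of input Flash Attention's variable-length kernels consume, but the helper names here are hypothetical and nothing below calls Flash Attention itself.

```python
from typing import List, Tuple


def unpad(input_ids: List[List[int]],
          attention_mask: List[List[int]]) -> Tuple[List[int], List[int], int]:
    """Pack a padded batch into one flat token stream (a sketch of unpadding).

    Returns (packed, cu_seqlens, max_seqlen): cu_seqlens[k] is the offset where
    sequence k starts in `packed`, and the last entry is the total token count.
    """
    packed: List[int] = []
    cu_seqlens = [0]
    max_seqlen = 0
    for ids, mask in zip(input_ids, attention_mask):
        tokens = [tok for tok, keep in zip(ids, mask) if keep == 1]  # drop padding
        packed.extend(tokens)
        cu_seqlens.append(len(packed))
        max_seqlen = max(max_seqlen, len(tokens))
    return packed, cu_seqlens, max_seqlen


def segment_ids(cu_seqlens: List[int]) -> List[int]:
    """Sequence index of every packed position; attention should stay within a segment."""
    return [k for k in range(len(cu_seqlens) - 1)
            for _ in range(cu_seqlens[k + 1] - cu_seqlens[k])]


def repad(packed: List[int], cu_seqlens: List[int], width: int,
          pad_id: int = 0) -> List[List[int]]:
    """Inverse of unpad for right-padded batches: restore rows of length `width`."""
    rows = []
    for start, end in zip(cu_seqlens, cu_seqlens[1:]):
        seq = packed[start:end]
        rows.append(seq + [pad_id] * (width - len(seq)))
    return rows


if __name__ == "__main__":
    # Toy right-padded batch; the token ids and pad id 0 are made up for illustration.
    ids = [[101, 7, 8, 102, 0, 0],
           [101, 9, 102, 0, 0, 0],
           [101, 4, 5, 6, 7, 102]]
    mask = [[1, 1, 1, 1, 0, 0],
            [1, 1, 1, 0, 0, 0],
            [1, 1, 1, 1, 1, 1]]
    packed, cu_seqlens, max_seqlen = unpad(ids, mask)
    print(len(packed), "real tokens instead of", 6 * len(ids), "padded slots")
    print("cu_seqlens:", cu_seqlens, "max_seqlen:", max_seqlen)
    print("segments:", segment_ids(cu_seqlens))
```

In this toy batch the model processes 13 real tokens instead of 18 padded slots. Because `segment_ids` marks which sequence each packed token came from, attention can be restricted to tokens in the same segment so packed sequences never attend to one another.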

- Last, we trained ModernBERT on a variety of data sources, including web docs, code, & scientific articles, for a total of 2 trillion tokens of English text & code: 1.7 trillion tokens at a short 1024-token sequence length, followed by 300 billion tokens at a long 8192-token sequence length.
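As a quick sanity check on the token budget above, this sketch splits the 2 trillion tokens into the two training phases and estimates how many sequences each phase contains. It assumes every sequence is packed to exactly its phase's sequence length, which is an idealization; the phase labels and the `summarize` helper are made up for illustration.

```python
from typing import List, Tuple

# Token budget from the thread: (phase label, tokens, sequence length).
# The phase labels are illustrative.
PHASES: List[Tuple[str, int, int]] = [
    ("short-context", 1_700_000_000_000, 1024),
    ("long-context", 300_000_000_000, 8192),
]


def summarize(phases: List[Tuple[str, int, int]]) -> Tuple[int, List[Tuple[str, float, int]]]:
    """Return total tokens plus (label, share of tokens, full-length sequences) per phase.

    Assumes every sequence is packed to exactly its phase's length (an idealization).
    """
    total = sum(tokens for _, tokens, _ in phases)
    rows = [(name, tokens / total, tokens // seq_len) for name, tokens, seq_len in phases]
    return total, rows


if __name__ == "__main__":
    total, rows = summarize(PHASES)
    print(f"total tokens: {total:,}")
    for name, share, sequences in rows:
        print(f"{name}: {share:.0%} of tokens, ~{sequences:,} sequences")
```

Under that assumption, the long-context phase is only 15% of the tokens and roughly 37 million 8192-token sequences, versus about 1.66 billion 1024-token sequences in the first phase.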

- For all the model design, training, and evaluation details, check out our arXiv preprint: arxiv.org/abs/2412.13663

- Thanks to my two co-leads, @nohtow.bsky.social & @bclavie.bsky.social, and the rest of our stacked author cast: @orionweller.bsky.social, Oskar Hallström, Said Taghadouini, Alexis Gallagher, Raja Biswas, Faisal Ladhak, @tomaarsen.com, @ncoop57.bsky.social, Griffin Adams, @howard.fm, & Iacopo Poli

- A big thanks to Iacopo Poli and @lightonai.bsky.social for sponsoring the compute to train ModernBERT, @bclavie.bsky.social for organizing the ModernBERT project, and to everyone who offered assistance and advice along the way. Also h/t to Johno Whitaker for the illustrations.

- I'm looking forward to seeing what you all will build with a modern encoder.

- PS: BlueSky needs to make their really long account tags not count against the character limit.