Manjari Narayan | @Neurostats

AI in Bio & Health & Therapeutic Development

Bio: linktr.ee/mnarayan

Substack: https://blog.neurostats.org

Peek into my brain: notes.manjarinarayan.org

Previously @dynotx @StanfordMed PhD@RiceU_ECE | BS@ECEILLINOIS

🧪🧮⚕️🧬🧠🖥🤖📈✍️🩺👩📈📉

- 📌

- The math for why it works is definitely related in both cases: it answers 'how many measurements do you need to make for this to work?' But they might merely be reinventing that here. I also totally agree that fractional designs are underutilized in bio experiments.

- This has been directly used in designing experiments to learn fitness landscapes in protein design. But it's possible that others invoke 'CS' branding even if it is just regular regression doing the signal estimation.

- CS is about making something like FFD work in high dimensions and where the sparsity isn't obvious in the canonical basis/representation.

- It's not exactly about fractional factorial designs, though compressed sensing did draw out connections to that, group testing, etc. from the mid-20th century. I suspect BROAD experimental design folks are calling a lot more things compressed sensing than might be the case though.

- It is primarily about sampling signals such that sparse additive/functional regression can recover the original signal. The key point is that the signal can be dense in ambient space as long as it can be sparsely represented in some other representation. users.soe.ucsc.edu/~afletcher/E...
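
A minimal sketch of the point in the posts above, not taken from the linked notes: a signal that is dense in the sample domain but sparse in a DCT basis is recovered from fewer random measurements than its ambient dimension. The DCT basis, the Gaussian measurement matrix, the use of orthogonal matching pursuit as the sparse regression step, and all names and sizes (`dct_basis`, `omp`, `demo`, `n`, `m`, `k`) are assumptions chosen for illustration.

```python
import numpy as np

def dct_basis(n):
    """Return an n x n orthonormal matrix whose columns are DCT-II basis vectors."""
    k = np.arange(n)[:, None]   # frequency index (rows)
    t = np.arange(n)[None, :]   # sample index (columns)
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (t + 0.5) * k / n)
    C[0, :] /= np.sqrt(2.0)     # rescale the constant row so that C @ C.T = I
    return C.T

def omp(A, y, k):
    """Orthogonal matching pursuit: greedily pick k columns of A to explain y."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        corr = np.abs(A.T @ residual)
        corr[support] = 0.0                      # never pick the same column twice
        support.append(int(np.argmax(corr)))
        # Refit by least squares on all columns selected so far.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    s = np.zeros(A.shape[1])
    s[support] = coef
    return s

def demo(n=256, m=128, k=4, seed=0):
    """Hypothetical sizes: ambient dimension n, m < n measurements, k nonzeros."""
    rng = np.random.default_rng(seed)
    Psi = dct_basis(n)
    s = np.zeros(n)
    idx = rng.choice(n, size=k, replace=False)
    s[idx] = rng.choice([-1.0, 1.0], size=k) * rng.uniform(1.0, 2.0, size=k)
    x = Psi @ s                                  # dense in the sample domain
    Phi = rng.standard_normal((m, n)) / np.sqrt(m)
    y = Phi @ x                                  # m noiseless random measurements
    s_hat = omp(Phi @ Psi, y, k)                 # sparse regression in the DCT domain
    return x, Psi @ s_hat

x, x_hat = demo()
print("max abs reconstruction error:", np.max(np.abs(x - x_hat)))
```

The sketch assumes noiseless measurements and a known sparsity level `k`; with noise or unknown sparsity, a penalized regression such as the lasso would be the more usual choice.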

- parsimonious doesn't reflect this property for me. A parsimonious representation can be entirely non-lossy in compression. But

- Excellent post. It comes down to making tradeoffs between cost and validity of multiplexed perturbations.

- Certain parts of science, like testing for genes, finding genes affected by perturbations, hunting for brain region -> behavior mappings and so forth, have made it seem like all exploration is just about multiple "one biological unit at a time" hypothesis testing problems.

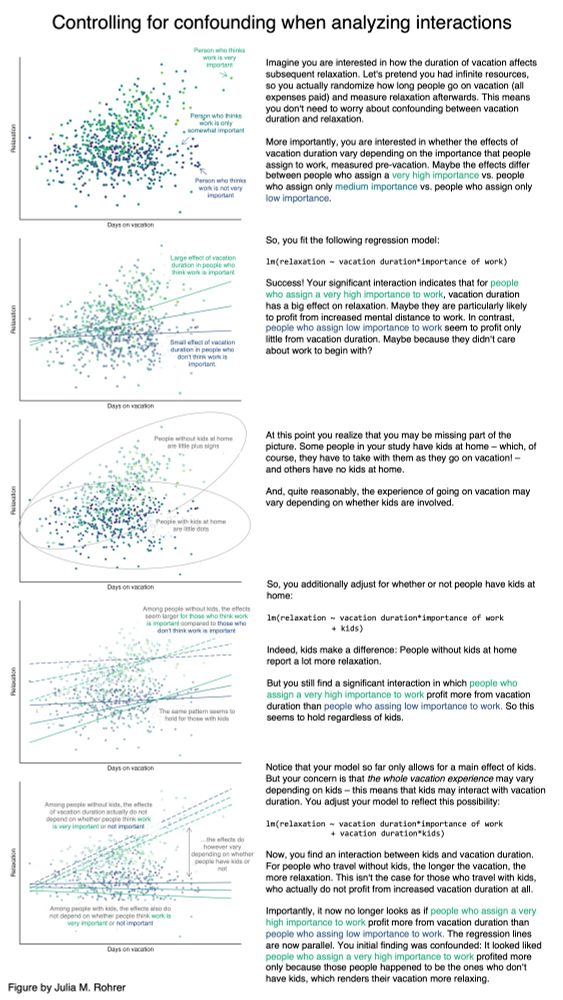

- Thanks to everybody who chimed in! I arrived at the conclusion that (1) there's a lot of interesting stuff about interactions and (2) the figure I was looking for does not exist. So, I made it myself! Here's a simple illustration of how to control for confounding in interactions:

- Does anybody have a good visualization to explain how interactions can be confounded, and why interactions require interaction controls? @urisohn.bsky.social maybe? (Asking because I have an idea, but want to check out what exists already before investing the effort)
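
A small simulation sketch of why an interaction needs its own interaction control. This is not the figure from the post; the data-generating model, the coefficients, and the names (`x` for a randomized treatment, `z` for the moderator of interest, `c` for a confounder correlated with `z`) are all assumptions made for illustration. The true outcome has an `x * c` interaction and no `x * z` interaction, yet a regression that controls for `c` only as a main effect still reports a sizable `x * z` coefficient.

```python
import numpy as np

def interaction_estimates(n=20000, rho=0.7, seed=0):
    """Return the estimated x*z coefficient without and with the x*c control."""
    rng = np.random.default_rng(seed)
    x = rng.integers(0, 2, size=n).astype(float)   # randomized binary treatment
    c = rng.standard_normal(n)                     # confounder of the moderator
    z = rho * c + np.sqrt(1 - rho**2) * rng.standard_normal(n)  # corr(z, c) = rho
    # Assumed truth: the treatment effect varies with c, not with z.
    y = 1.0 * x + 0.5 * z + 0.5 * c + 1.0 * x * c + rng.standard_normal(n)

    ones = np.ones(n)
    # Naive model: c enters as a main effect only.
    X_naive = np.column_stack([ones, x, z, c, x * z])
    # Controlled model: adds the x*c interaction control.
    X_ctrl = np.column_stack([ones, x, z, c, x * z, x * c])

    b_naive, *_ = np.linalg.lstsq(X_naive, y, rcond=None)
    b_ctrl, *_ = np.linalg.lstsq(X_ctrl, y, rcond=None)
    return b_naive[4], b_ctrl[4]                   # column 4 is x*z in both models

naive, controlled = interaction_estimates()
print(f"x*z coefficient, main-effect control only: {naive:.2f}")
print(f"x*z coefficient, with x*c control:         {controlled:.2f}")
```

Under these assumptions the naive `x * z` coefficient absorbs the omitted `x * c` term in proportion to the correlation between `z` and `c`, while the controlled model pulls it back toward zero.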

- Yes, I kept bringing it up, but psychiatry and neuroimaging are too influenced by mediator-moderator terminology. The same thing is true in protein science and in systems pharmacology, where statistical and causal interactions are indistinguishable!

- 📌

- I stopped getting any engagement there around 2021-2022, before which it was amazing. Even paying for premium+ made no difference and they didn't approve it for a year.

- Cool I didn't know if this 📌

- 📌

- The question is: do physicists like the way non-physicists use those words? I made a whole presentation trying to explain that their notion of optimality was not really statistically optimal. 🤦🏽‍♀️

- In astronomy, most people are working with the same expensive-to-collect datasets. There is much less publication bias. That alone makes the scientific process more efficient. The number of 'knobs', levels of causation, and unknowns is just much larger in bio. Funding pressures are very different in bio.

- I had an applied math background when I sat in on my first cognitive neuroscience course around 2011 and my brain exploded. All sorts of things were called optimal that wouldn't be to a statistician, and maximum likelihood was used to argue for Bayesian hypotheses. It was terribly confusing.

- 📌

- Methodolatry, I love this word. #Metascience #Metasky

- Perfunctory science. I recall coming up with that when brainstorming alternatives to 'cargo cult' with @irisvanrooij.bsky.social

- But some sciences do truly have multiple independent observations with entirely different theories / orthogonal biases and so forth. The faster they have access to those the better. It is also a difference between in-human and in-vitro/in-vivo research.

- I was a quant in human neuroscience and then a quant in protein science, and it was totally different. Knowing that your results might fail to replicate in an experiment 4 months later makes you less likely to cling to a result, and you kill your darlings a lot more quickly.

- Part of this explanation doesn't work, e.g., astronomy is strictly observational but doesn't have the same issues. It isn't really about isolating a reductionist system. That is an illusion in most parts of networked biological systems anyway.

- It is a heavy relaxation of the word 'p-hacking'. I think he means large researcher degrees of freedom / garden of forking paths: sites.stat.columbia.edu/gelman/resea...

- I read methodological anarchy sort of in the vein of Sander Greenland's interpretation: that all 'optimal' things break down somewhere, and that it is actually okay to use your judgement and deviate from them. Related to one of my favorite lectures from Huber.

- In my experience, the methods police are usually not even technically right. They might obsess about a minor statistical problem because it is in the rules, when lack of independence is the most salient issue. I don't know if that is what he means here. Will have to read it.

- I've seen this firsthand in parts of protein design and in biomarkers/precision medicine. And if the pattern isn't obvious, it might be due to a poor similarity metric.

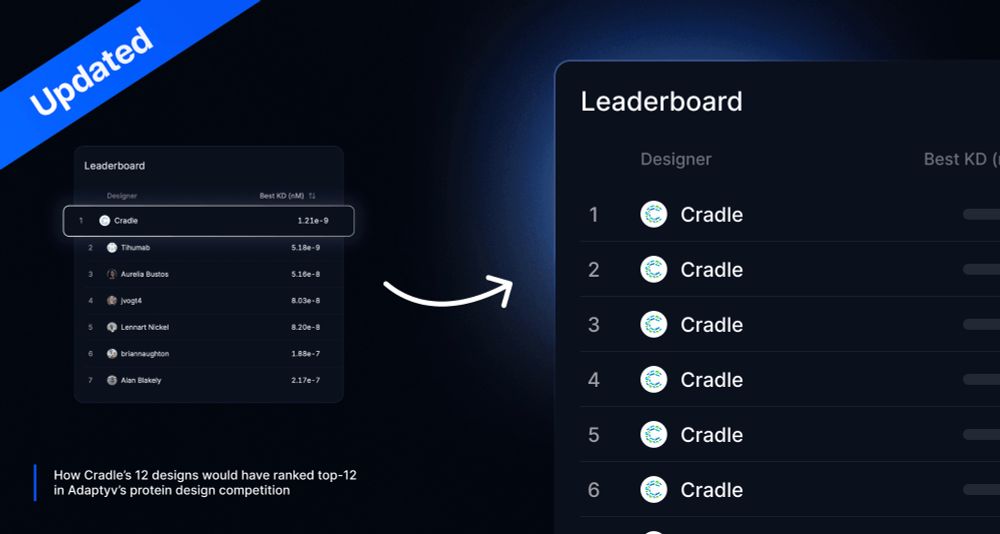

- This competition was a rare instance of proving the false negative rate of virtual predictions. A design that scored poorly via virtual scores won the competition. But without data like this, everyone is both wildly optimistic and thinks criticisms are too harsh. www.cradle.bio/blog/adaptyv2

- 📌 This is great, I like to collect these examples.

- So Feyerabendesque?

- This is the Pearlian way of looking at it. If GPTs learn to predict experimental results or seemingly counterfactual predictions, it is because those patterns were already in the training data, the right nonparametric model waiting to be learned.

- But don't you get a lot of pushback against this? E.g., look at __ performance on __ reasoning benchmarks, look at performance of virtual screens or de novo drug designs, look at biodiscovery agents, etc.

- Oh didn't know that. I'll have to find out if it means what I think it means.

- 📌

- 📌

- Up until now we actually had a system where medical and statistical reviewers very rigorously weighed the evidence and told drug developers, "No, your evidence isn't good enough." But the decision makers who look at the full picture can still make the call to conditionally approve a drug. 3/n

- Value-laden decision making didn't hamper the ability of the FDA to ruthlessly weigh the evidence. On the other hand, this was not true for drug developers, who often present the same evidence in a much better light, or even for the best medical journals like NEJM, which fail to find the problems. 4/n

- How do you treat a case like decision making to achieve certain extra-scientific outcomes vs. rigor of evidence? The way accelerated approvals work for approving novel therapies falls into this, and I would argue it implicitly results in values rightly influencing thresholds of decision-making. 1/2

- It can and should be the case that we apply different thresholds to the causal and statistical evidence for surrogate endpoints, incorporating non-epistemic concerns like enabling future innovation and the consequences of delaying a plausibly effective treatment.

- Philosophy has jumped the shark. If anyone in the philosophy community wonders why their neuroscientist colleagues don’t pay them any mind, read this paper. bsky.app/profile/zoed...

- 😂 I never thought of the screening property this way

- Yes, I've noticed that MDs are very prone to treating absence of evidence as evidence of absence. But as a science, we are still very immature about evidential rigor outside of RCTs. It's not impossible, but it is actually a harder regime. But even RCTs aren't the ideal experiments people think, so 🤷‍♀️

- Well, an MD once told me my talk about causal inference for novel neuromodulation experiments was 'philosophy'. Biologists are so empirical, many don't even think statistical science or theory of measurement is 'science'.

- This problem with representations has been going on for a while though. The problem is most neuroscientists don't consider issues of identifiability in their research designs. Technically they have some equivalence class that the observed neural representations are part of. 1/n

- It's possible to confirm its existence by intervening in the ways that they do, but they haven't verified whether those representations are consistent with other conditions not measured and so forth. That has been changing recently as neuroscience is getting more embodied and ethological. 2/n

- 📌

- Wow! She lived till 104. #MathSky

- 📌