I spoke to @washingtonpost.com about how bad actors are "grooming" LLMs with disinformation.

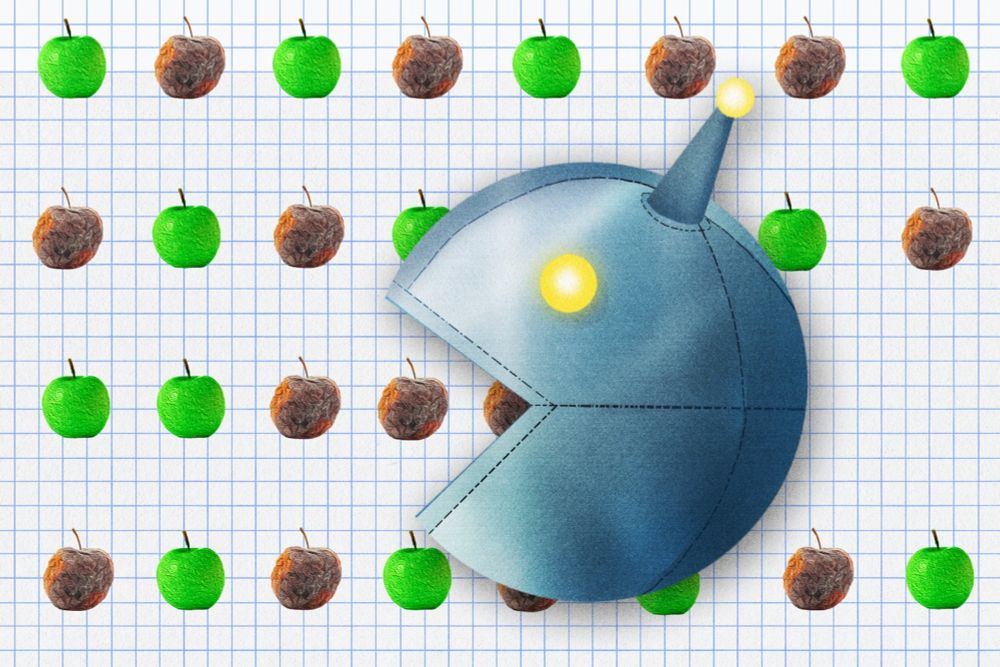

Most chatbots struggle with disinformation, and the problem gets worse in search-augmented systems that prioritize recent information. The more real-time these models become, the more vulnerable they are to manipulation.

Russia seeds chatbots with lies. Any bad actor could game AI the same way.

In their race to push out new versions with more capability, AI companies leave users vulnerable to “LLM grooming” efforts that promote bogus information.

Apr 18, 2025 09:53