Gabriel Martín Blázquez

ML Engineer @hf.co 🤗 Building tools like Argilla and distilabel to help you take care of your datasets!

- The SmolLM2 paper is out! We wrote a paper detailing the steps we took to train one of the best smol LMs 🤏 out there: pre-training and post-training data, training ablations and some interesting findings 💡 Go check it out and don't hesitate to share your thoughts/questions in the comments section!

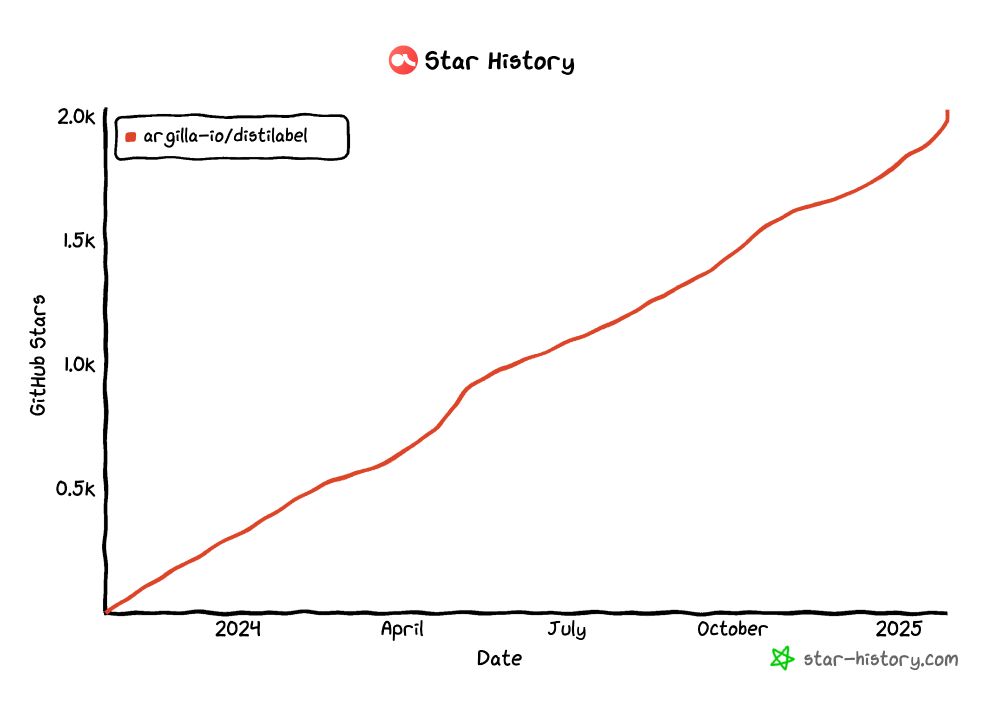

- distilabel ⚗️ reached 2k ⭐️ on GitHub!

- Reposted by Gabriel Martín Blázquez: We are reproducing the full DeepSeek R1 data and training pipeline so everybody can use their recipe. Instead of doing it in secret, we can do it together in the open! Follow along: github.com/huggingface/...

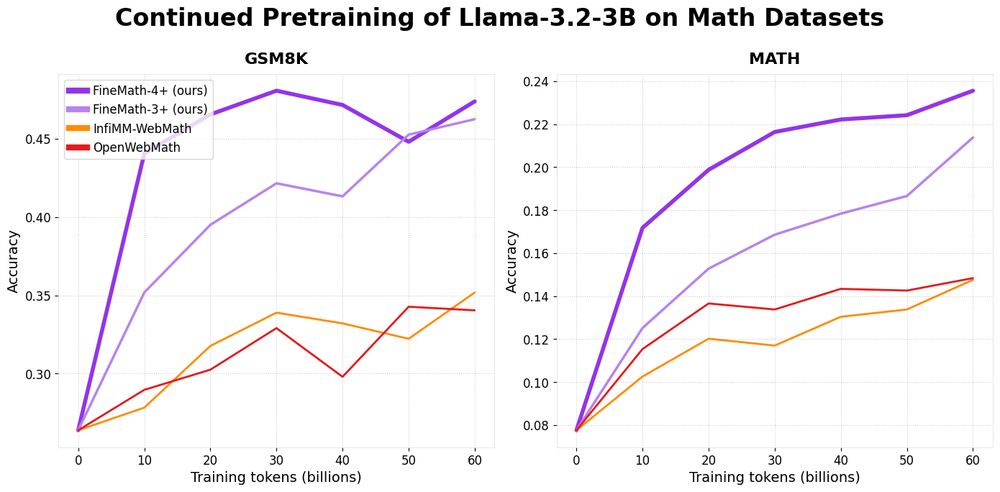

- Reposted by Gabriel Martín Blázquez: Introducing 📐FineMath: the best open math pre-training dataset with 50B+ tokens! Math remains challenging for LLMs, and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH. 🤗 huggingface.co/datasets/Hug... Here’s a breakdown 🧵

- Reposted by Gabriel Martín Blázquez: 🚀 Argilla v2.6.0 is here! 🎉 Let me show you how EASY it is to export your annotated datasets from Argilla to the Hugging Face Hub. 🤩 Take a look at this quick demo 👇 💁‍♂️ More info about the release at github.com/argilla-io/a... #AI #MachineLearning #OpenSource #DataScience #HuggingFace #Argilla

- How many regular expressions have you written without the help of an LLM since ChatGPT appeared?

- Reposted by Gabriel Martín Blázquez: For anyone interested in fine-tuning or aligning LLMs, I’m running this free and open course called smol course. It’s not a big deal, it’s just smol. 🧵>>

- Is it just me, or is the latest Claude 3.5 Sonnet too prone to generating code when asked technical questions that aren't directly related to coding?

- Reposted by Gabriel Martín Blázquez: Let's go! We are releasing SmolVLM, a smol 2B VLM built for on-device inference that outperforms all models at similar GPU RAM usage and token throughput. SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

- As part of the SmolTalk release, the dataset mixture used for the @huggingface.bsky.social SmolLM2 model, we built a new version of the MagPie Ultra dataset using Llama 405B Instruct. It contains 1M rows of multi-turn conversations with diverse instructions! huggingface.co/datasets/arg...

- Reposted by Gabriel Martín Blázquez: Making SmolLM2 more reproducible: open-sourcing our training & evaluation toolkit 🛠️ github.com/huggingface/... Pre-training & evaluation code, synthetic data generation pipelines, post-training scripts, on-device tools & demos, all under Apache 2.0. V2 data mix coming soon! Which tools should we add next?

- Excited to announce the SFT dataset used for @huggingface.bsky.social SmolLM2! The dataset for SmolLM2 was created by combining multiple existing datasets and generating new synthetic datasets, including MagPie Ultra v1.0, using distilabel. Check out the dataset: huggingface.co/datasets/Hug...