Taylor Webb

Studying cognition in humans and machines. scholar.google.com/citations?user=WCmr…

- Reposted by Taylor Webb: Top-down feedback is ubiquitous in the brain and computationally distinct, but rarely modeled in deep neural networks. What happens when a DNN has biologically inspired top-down feedback? 🧠📈 Our new paper explores this: elifesciences.org/reviewed-pre...

- Reposted by Taylor Webb: [📄] Are LLMs mindless token-shifters, or do they build meaningful representations of language? We study how LLMs copy text in-context, and physically separate out two types of induction heads: token heads, which copy literal tokens, and concept heads, which copy word meanings.

- New work out now in TMLR: Evaluating Compositional Scene Understanding in Multimodal Generative Models. We find that multimodal models have improved in their abilities to both generate and interpret compositional visual scenes, but still lag behind humans. arxiv.org/abs/2503.23125

- Reposted by Taylor Webb: Reasoning Algorithms, an online workshop open to all, with a bunch of speakers from cognitive development, primate cognition, neuroscience, and computational modeling 🧪🧠 obssr.od.nih.gov/news-and-eve...

- Reposted by Taylor Webb: PhD position in cognitive computational neuroscience! Join us & investigate how we can endow domain-specific models of vision (e.g., DNNs) with domain-general processes such as metacognition or working memory. All details => www.kuleuven.be/personeel/jo... #PsychSciSky #Neuroscience #Neuroskyence

- Reposted by Taylor Webb: Why Tononi et al.'s defense of IIT fails to convince me. medium.com/@kording/86f...

- I am happy to be a signatory to this updated critique of the integrated information theory (IIT) of consciousness. Despite much media attention, I agree that its 'core claims are untestable even in principle' and it is therefore unscientific. www.nature.com/articles/s41...

- Reposted by Taylor Webb: New version of "the letter" in Nature Neuroscience. Like many others in the field, I signed because I believe that IIT threatens to delegitimize the scientific study of consciousness: www.nature.com/articles/s41....

- LLMs have shown impressive performance in some reasoning tasks, but what internal mechanisms do they use to solve these tasks? In a new preprint, we find evidence that abstract reasoning in LLMs depends on an emergent form of symbol processing arxiv.org/abs/2502.20332 (1/N)

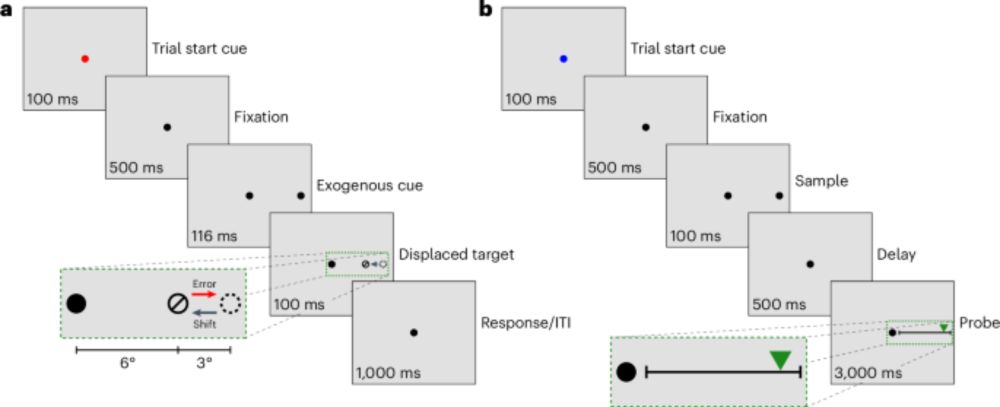

- Reposted by Taylor Webb: I’m very excited to finally see this one in print! Led by the incomparable @brissend.bsky.social www.nature.com/articles/s41... We find that cognitive processes (e.g. attention, working memory) undergo error-based adaptation in a manner reminiscent of sensorimotor adaptation. Read on! (1/n)

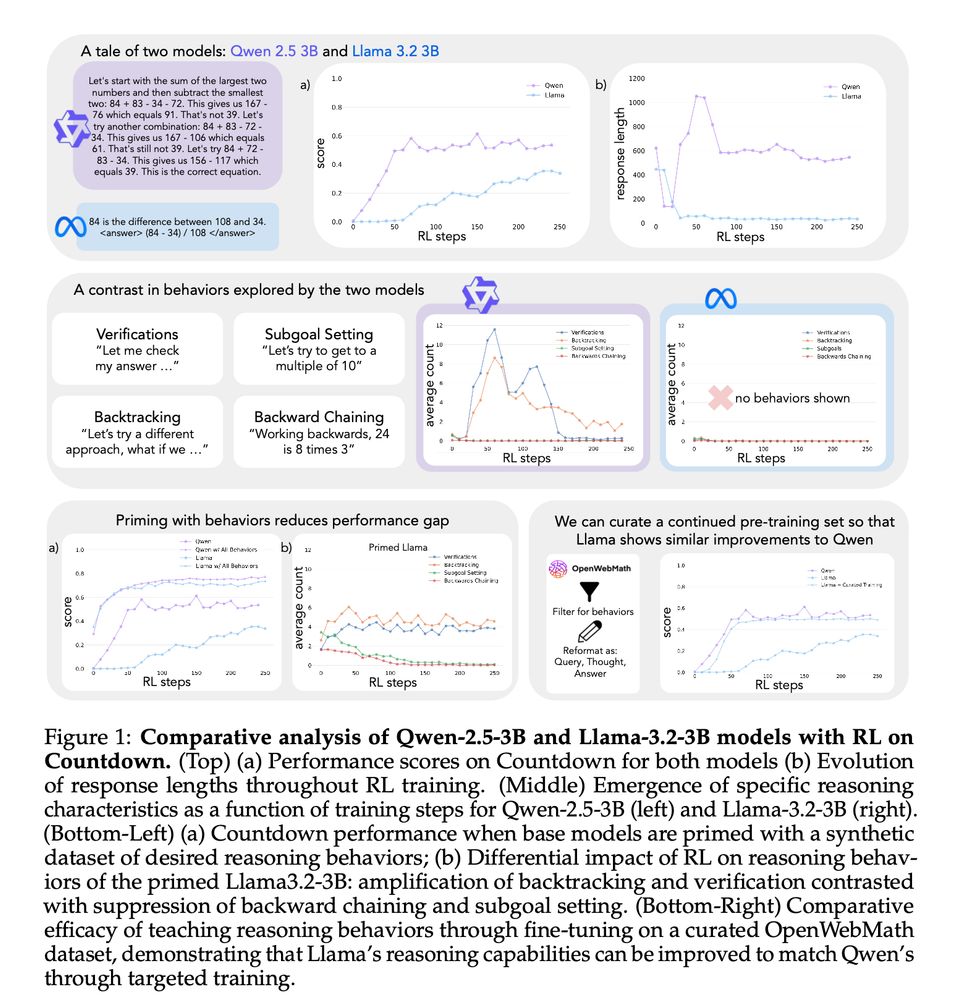

- Reposted by Taylor Webb: 1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

- Reposted by Taylor Webb: preprint updated - www.biorxiv.org/content/10.1... Each of us perceives the world differently. What may underlie such individual differences in perception? Here, we characterize the lateral prefrontal cortex's role in vision using computational models ... 1/ 🧠📈 🧠💻

- Reposted by Taylor Webb: vision+language people: Does anyone have a good sense why most of the recent sota VLMs now have a simple MLP as the mapping network between vision and LLM embeddings? Why does this work better, is learning more efficient? Slowly over time people dropped the more elaborate Q-Former/Perceiver arch

- Reposted by Taylor Webb: Key-value memory is an important concept in modern machine learning (e.g., transformers). Ila Fiete, Kazuki Irie, and I have written a paper showing how key-value memory provides a way of thinking about memory organization in the brain: arxiv.org/abs/2501.02950

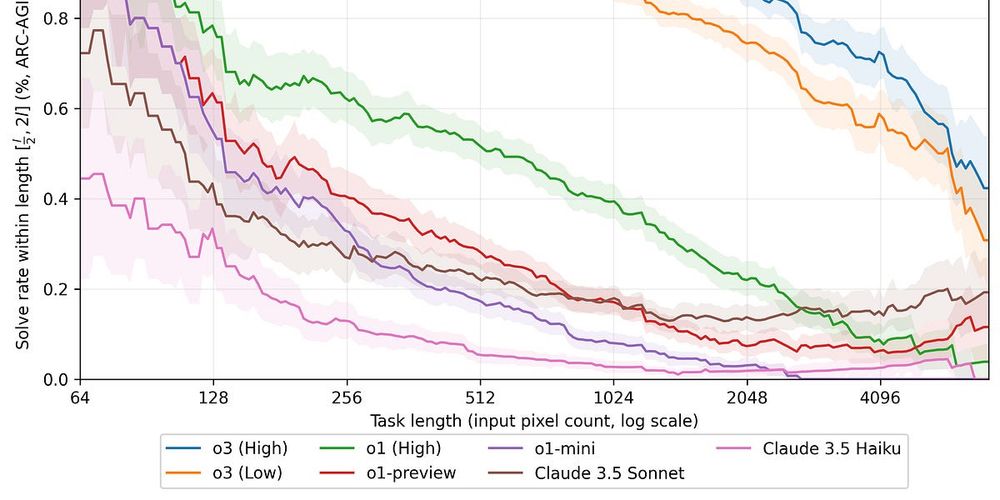

- Very nice analysis of the important role that visual perception plays in ARC problems. The ability of LLMs to solve these problems is dramatically affected by the size of the problems, even when the problems are otherwise identical. anokas.substack.com/p/llms-strug...

- These are some truly incredible results. It is of course ridiculous to try and pretend like this model is really doing program synthesis (and thus previous claims about LLMs being an ‘off-ramp to intelligence’ are vindicated). A neural network has now matched average human performance on ARC.

- Reposted by Taylor Webb: In this new preprint @smfleming.bsky.social and I present a new theory of the functions and evolution of conscious vision. This is a big project: osf.io/preprints/ps.... We'd love to get your comments!

- Reposted by Taylor Webb: How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this: Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢 🧵⬇️

- Excited to announce that I'll be starting a lab at the University of Montreal (psychology) and Mila (Montreal Institute for Learning Algorithms) in summer 2025. More info to come soon, but I'll be recruiting at the Master's and PhD levels. Please share / get in touch if you're interested!

- Fascinating paper from Paul Smolensky et al. illustrating how transformers can implement a form of compositional symbol processing, and arguing that an emergent form of this may account for in-context learning in LLMs: arxiv.org/abs/2410.17498

- Very excited to share this work in which we use classic cognitive tasks to understand the limitations of vision language models. It turns out that many of the failures of VLMs can be explained as resulting from the classic 'binding problem' in cognitive science.

- (1) Vision language models can explain complex charts & decode memes, but struggle with simple tasks young kids find easy - like counting objects or finding items in cluttered scenes! Our 🆒🆕 #NeurIPS2024 paper shows why: they face the same 'binding problem' that constrains human vision! 🧵👇

- Reposted by Taylor Webb: 1/ Here's a critical problem that the #neuroai field is going to have to contend with: Increasingly, it looks like neural networks converge on the same representational structures - regardless of their specific losses and architectures - as long as they're big and trained on real world data. 🧠📈 🧪

- Looking forward to digging into this!

- New theoretical results for anyone interested in SDT, confidence and metacognition! With superstar Wiktoria Łuczak who worked on this as an undergraduate, and first presented it in a talk at CCN that brought the house down arxiv.org/abs/2410.18933 #neuroskyence #PsychSciSky

- Reposted by Taylor Webb: i plan to move to Korea later this year, & will soon hire at all levels (students, postdocs, staff scientists, junior PIs) - docs.google.com/document/d/1... my lab in Japan will run till at least 2025. my job has been nothing but the dream job. but hopefully the above explains it. #neuroscience

- Reposted by Taylor Webb: New results! Led by Brad Love and crew. Higher cortical areas radically reconfigure to stretch representations along task-relevant dimensions. It sure sounds like something they would do, doesn't it? #neuroscience www.biorxiv.org/content/10.1...

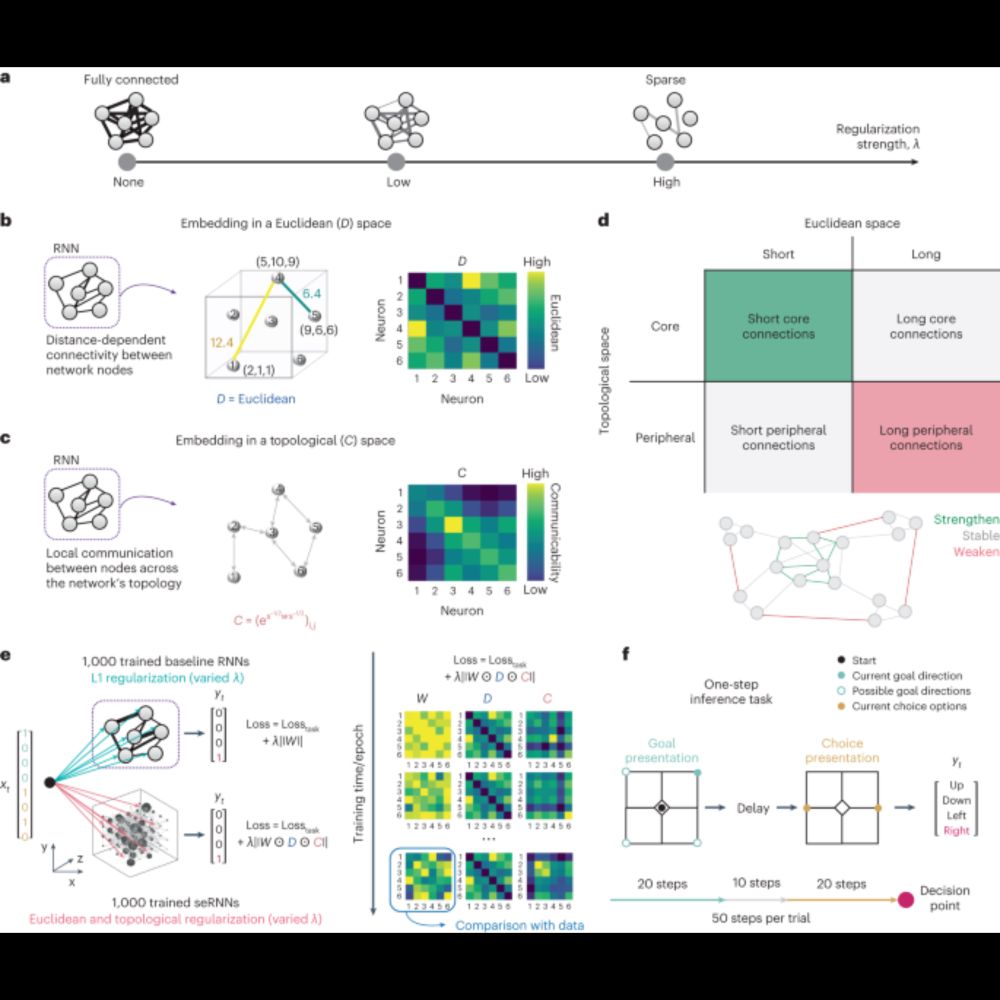

- Reposted by Taylor Webb: Two years ago @danakarca.bsky.social & I wondered: Could the features we observe in brains across species be caused by shared functional, structural & energetic constraints?🧠⚡️ With our spatially embedded RNN we show this is true! www.nature.com/articles/s42... 🧵 #neuroscience #compneuro #NeuroAI

- Reposted by Taylor Webb: Now out in Psychological Review, with Andre Beukers, Maia Hamin, and Jon Cohen: our model of how episodic memory can support performance on the n-back task, "When working memory may just be working, not memory". Free version here: osf.io/preprints/ps... #psychscisky #neuroskyence

- Reposted by Taylor Webb: New postdoc opportunity to work jointly with @cantlonlab.bsky.social and me to understand cognition across species, age, and culture! cmu.wd5.myworkdayjobs.com/CMU/job/Pitt...

- Reposted by Taylor Webb: The notion that goals are central to human cognition is intuitive, yet learning and decision-making researchers have often overlooked the topic. In this Trends in Cognitive Sciences review, @annecollins.bsky.social and I propose it’s time to start studying goals in their own right 🧠 t.co/He6PIpQdt9

- Reposted by Taylor Webb: One of the enduring mysteries in perceptual decision making is how external sensory features are transformed into internal decisional evidence. In a new preprint led by Marshall Green, we show that artificial neural networks (ANNs) can be used to uncover this transformation. osf.io/preprints/ps...

- Reposted by Taylor Webb: Do deep transformer LMs generalize better? In a new preprint we (Sjoerd van Steenkiste, Ishita Dasgupta, Fei Sha, Dan Garrette, & @tallinzen.bsky.social) control for parameter count to show how depth helps models on compositional generalization tasks, but diminishingly so 🧵 jacksonpetty.org/depth

- Reposted by Taylor Webb: A colorful and history-rich take on the IIT pseudoscience situation: felipedebrigard.substack.com/p/a-pseudo-r...

- Reposted by Taylor Webb: What is representational alignment? How can we use it to study or improve intelligent systems? What challenges might we face? In a new paper (arxiv.org/abs/2310.13018), we describe a framework that attempts to unify ideas from cognitive science, neuroscience and AI to address these questions. 1/2

- Reposted by Taylor Webb: Do large language models solve verbal analogies like children do? Yes, they (kind of) do! arxiv.org/abs/2310.20384

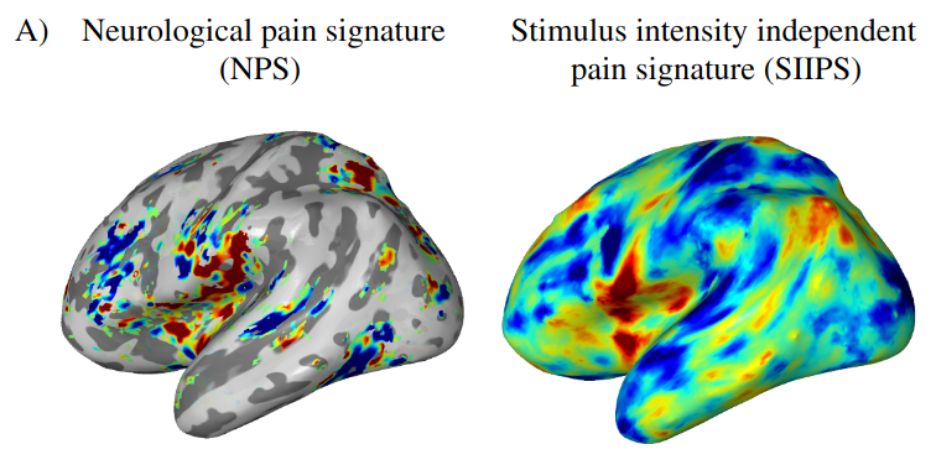

- Reposted by Taylor Webb: In our new decoded neurofeedback study, we used an MNI-space decoder of pain (SIIPS) for the purpose of neuro-reinforcement. We showed that (1) we can modulate SIIPS independently from another decoder of pain intensity (NPS) and (2) have some influence over pain perception #neuroskyence #PsychSciSky

- Reposted by Taylor Webb: Very excited to share a substantially updated version of our preprint “Language models show human-like content effects on reasoning tasks!” TL;DR: LMs and humans show strikingly similar patterns in how the content of a logic problem affects their answers. Thread: 1/

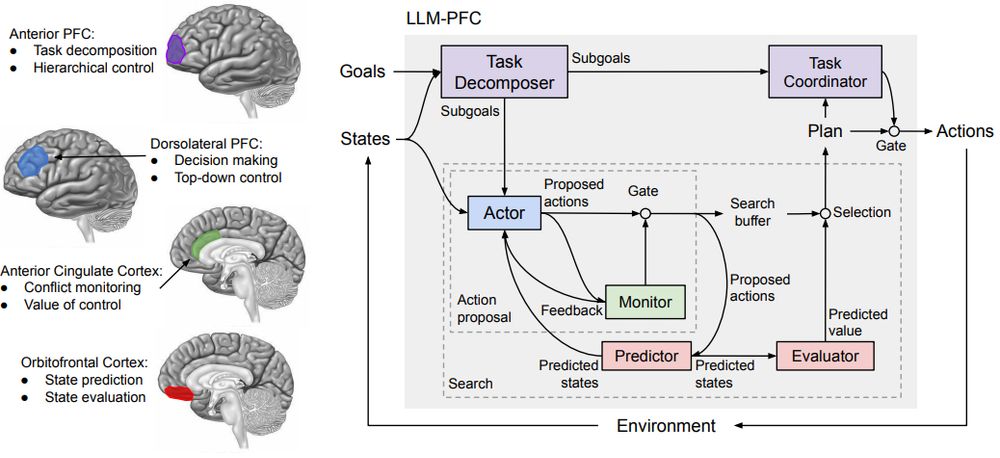

- Very happy to be involved in this project with Shanka Subhra Mondal and @neuroai.bsky.social. We propose a PFC-inspired modular architecture to enable multi-step planning in LLMs.

- Thrilled to share new work w @taylorwwebb.bsky.social Shanka Mondal: A Prefrontal Cortex-inspired Architecture for Planning in Large Language Models (arxiv.org) LLMs struggle w multi-step planning. We propose a solution inspired by brains: planning via recurrent interactions of PFC subregions. 🧵1/n